The previous publication in Cosmonaut of a biography of Glushkov, the Soviet designer of OGAS, attracted a lot of attention and inaugurated a discussion on planning. But before Glushkov, there was another idea of a “big computer”, devised by the pioneer of cybernetics in the USSR, Anatoly Kitov.

The popular scholarship on Kitov (and Glushkov) is rather lacking, with only the recent books From Newspeak to Cyberspeak and How Not to Network a Nation providing some background, albeit flawed, on these thinkers. There are also shorter works in the specialized literature, but they sit behind heavy paywalls. On the internet there is little material, and his Wikipedia page is barely readable, despite his rightful position as the pioneer of computation in the USSR. He was expelled from the party, and his texts were held as military secrets until rather recently.

To address this gap, inspired by the Spanish-speaking group Cibcom, we present here two new translations. The first is a short biography of Kitov, previously published in Novosti RIA. This piece covers Kitov’s upbringing, education, and scientific achievements, as well as his plans to build computing systems that would plan the economy and warfare, and how his personality led to his conflict with the Soviet hierarchy. With this piece, we hope to reintroduce Kitov to unfamiliar readers.

The second is Kitov’s seminal paper, “The Key Features of Cybernetics” which was the first positive reference to this field published in the USSR. It contains three sections, which combine a scientific description of the basic concepts in information theory, as well as a critical analysis not only of cybernetics but of science in general that is very illustrative of the approach taken by Soviet scientists. Kitov approaches cybernetics critically, from a communist perspective, highlighting where “bourgeois” philosophy becomes too mechanical and must be enriched by dialectical materialism. As mentioned in his biography, Kitov authored the paper years before it was published, and later added two co-authors to this work.

When taken together, both documents give a good picture of what the reception of cybernetics in the late 50s/early 60s was, and they also recapitulate some of the political debates around planning in the USSR.

The Story of How the USSR Did Not Need the Pioneer of Cybernetics by Yury Revich

Anatoly Ivanovich Kitov can reasonably be considered the "father of Soviet cybernetics", although he had neither an exalted position in the Soviet scientific hierarchy nor the honorary regalia which the government bestowed on nuclear engineers and cosmonauts.

For Kitov’s claim to fame, it would be enough to just cite his book “Electronic Digital Computers”, published in 1956 and translated in many countries including the United States. It was from this monograph that many giants of national science, who later became famous in computing and adjacent fields, learned how computers (electronic computing machines - as computers were called in the USSR) worked. Among them are not only Viktor Glushkov, who became something like the “chief cyberneticist” of the USSR (and loved to emphasize his first acquaintance with computers from Kitov's book), but also academicians such as Keldysh, Berg, Kantorovich, Trapeznikov; surnames that speak for themselves.

But this book is by no means his only achievement. Anatoly Kitov was destined to be in the right place at the right time, and he was actively involved in shaping the very atmosphere of that time.

From anti-aircraft gunner to programmer

His father was a white officer (although from a working-class family) who, for obvious reasons, concealed his past. So although Kitov was born in Samara in 1920, a year later his family fled to Tashkent from possible persecution by the Bolshevik authorities and from the famine that engulfed the Volga region. In 1939 he graduated with honors from high school and entered the Central Asian State University in the physics and mathematics department.

But he was not destined to devote his life to nuclear physics as he had intended in his youth. In November 1939 Kitov was drafted into the army, first as a machine gunner and then as a cadet in the Leningrad anti-aircraft school. He served only a year. The war began, and the fledgling anti-aircraft gunner was sent to the Southern Front.

Unfortunately, there is not enough space here to describe Kitov's military adventures. In particular, he was even presented with the Order of Lenin for his brilliant command of an anti-aircraft division filling in for the sick captain, but in the turmoil of those days the nomination was lost, and many witnesses died.

In between battles, Kitov did not forget to study higher mathematics, and after the war he was sent to Moscow to enter the Artillery Military Academy of Engineering (now the Peter the Great Academy of Rocket Forces and Artillery). He immediately passed the exams for the first course and was admitted to the second.

After graduating from the academy Kitov followed the scientific path, becoming a referent of the Academy of Artillery Sciences. In the early 1950s, he became interested in new computers and managed to convince his superiors to send him as a representative of the Ministry of Defense to the SKB-245[1], which was then engaged in building one of the first domestic computer systems, Strela. In 1952 he defended his Master’s Thesis: “Programming of the outer ballistics problems for the long-range missiles”. Thus began the computer science era of Kitov’s life, which had crucial consequences for him and for the country.

Rehabilitation of the “reactionary pseudoscience”

In 1952 Kitov was appointed the head of the computer department he created at the Academy of Artillery Sciences. On this foundation, a computing center was created in 1954. This was one of the first in the USSR, the VC-1 of the Ministry of Defense, which was headed by Kitov until 1960, at the time of his conflict with the Ministry leadership.

As early as 1951 Kitov managed to read “Cybernetics” by Norbert Wiener in the special repository of SKB-245, a book that was then prohibited in the USSR. Wiener's work made a deep impression on the young scientist. He was one of the first to understand that a computer is not just a large calculator, but something quite new which could solve a huge range of problems that were not necessarily purely computational. These problems included management tasks up to the management of entire economic complexes.

But first, it was necessary to rehabilitate cybernetics (this term is not presently in use, it has been replaced by “computer science”) as a scientific field. Soviet ideologists were at pains to come up with derogatory definitions for this “reactionary pseudoscience”, as the 1954 Dictionary of Philosophy called it. “Servant of capitalism” was the mildest stigma (the phrase “capitalism's whore” also appeared in the press). Rehabilitation became possible after Stalin's death. From mid 1953 until 1955 Kitov, together with the mathematician Alexey Andreevich Lyapunov and other scientists, toured leading research institutes giving lectures on cybernetics to prepare the ground. It should be noted that many famous figures and officials were on the side of the “rehabilitators”, including some members of the ideological department of the Central Committee.

The final rehabilitation of cybernetics is associated with an article in the April 1955 issue of Voprosy Filosofii (Questions of Philosophy) titled “The Main Features of Cybernetics”. The article, which was written by Kitov himself long before its publication, was subsequently finalized with the participation of Lyapunov. To increase its authority, Academician Sobolev was invited as a co-author.

Soviet Internet

The main mission in Kitov’s life which was not brought to practical implementation was the development of a plan to create a computer network that would manage the national economy and solve military tasks (Unified State Network of Computer Centers - EGSVC in Russian). This plan was directly proposed by Anatoly Ivanovich to the highest authority. He sent a letter to Nikita Khrushchev, the General Secretary of the Communist Party of the Soviet Union, in January 1959. Receiving no response (even if the initiative was verbally supported in various circles), in the fall of that year he sent a new letter to the very top, attaching to it a 200-page detailed draft that became known as the “Red Book”. The consequences of his persistence were disastrous - Kitov was expelled from the party and removed from the position of head of the VC-1, which he created. He was in fact dismissed from the Armed Forces and left without the right to hold senior positions.

Why did this happen? There are several reasons. One of them, purely subjective, was that Kitov was not in the slightest degree a politician. The note he sent bypassed his direct superiors (which in the military, to put it mildly, is not customary) and began with criticism of the leadership of the Defense Ministry. After the Communist hierarchs sent Kitov's project to the Ministry of Defense for review, the scientist's fate was sealed. For those who do not know or do not remember the realities of the Communist regime, let us inform you: the expulsion of a person from the Party in those days was equal to a civil execution.

The second reason is deeper: a few years later Glushkov, who promoted a similar idea under the name of OGAS ("National Automated System of Information Retrieval and Processing"), was also faced with it. Surprisingly, the idea of automated control, which perfectly fit into the concept of planned economy (these initiatives have even caused a noticeable concern in the West), could not find support among the Soviet managers and economists. In his memoirs, Glushkov characterized the latter group with the words: “those who did not count anything at all”. These people instinctively understood that with the introduction of objective indicators and rigorous accounting systems, the power to “punish and pardon” would flow out of their hands. There would be no inspiring feats of development of the virgin lands, no “romance” of blocking the Siberian rivers. “Electronic brains” would say that the great undertakings “under the leadership of the party” are not profitable.

Glushkov was then told as follows: “Optimization methods and automated control systems are not needed, because the Party has its own management methods: for this purpose it consults with the people, for example, convenes a meeting of Stakhanovites or collective farmer-donors.”

Kitov’s military superiors were even more unprepared for the introduction of such innovations. They were fine with the use of computing aids to calculate the trajectories of ballistic missiles and the first satellites, as was done in the VC-1, but they thought that they could manage defense just fine. Many serious scientists did not respond to Kitov's ideas. The idea of entrusting an electronic machine to control people, and furthermore, on such a scale, was too unusual.

At first glance, Kitov's project was much more realistic than the OGAS that Glushkov proposed. OGAS considered only the civilian economy, and Glushkov had honestly warned that its construction would stretch over three or four five-year periods and cost more than the nuclear and space programs combined (although he did not doubt its payback and efficiency). In order to reduce costs, Kitov proposed to create a dual-purpose system: in peacetime it would serve primarily to manage the national economy, and in the event of war the computing power would rapidly be switched to the needs of the military. A specific feature of the project was the full autonomy of the main computer centers, which were supposed to be located in protected bunkers. All operations had to be carried out remotely, over a network.

It should be recalled that the first computer network in the West, as it is considered, worked only in 1965. This illustrates the main principle of Kitov's project in terms of competition with the United States: “overtaking without catching up”. As we know, later this principle was firmly rejected - in 1969 it was decided to copy the IBM System/360, a step many tend to consider disastrous for the Soviet computer industry (incidentally, both Kitov and Glushkov, and most other figures of the Soviet “computer engineering” opposed this, but they were not listened to).

In our country little is known about it, but in the early 1970s, the ideas of Kitov and Glushkov were taken over by the famous English cyberneticist, Stafford Beer. He enthusiastically joined the work to create an analogue of the OGAS in Chile, then led by socialist Salvador Allende. Beer did not have even a shadow of the capabilities that the powerful Soviet machine had - suffice it to say, there were only two computers for all of Chile. But Beer himself never doubted the feasibility of the project, which was only stopped by the Pinochet coup.

From today's perspective, we can name many more reasons why such global projects as EGSVC and OGAS would not have been implemented. But there is no doubt that Kitov anticipated a number of things that have become standard these days. These include enterprise management systems (EMS), automated process control systems (APCS), information systems of banks and trading companies, and much more. In general, the modern economy (as well as military affairs) is unthinkable without computer systems, including global information networks.

After the disaster

What happened to Kitov can be called a catastrophe only when viewed from outside. The morning after his expulsion from the Communist Party, his family was amazed to see him sitting at his desk, writing yet another scientific article. He did not despair: he continued to promote the ideas of the EGSVC. In 1963 he defended his doctoral thesis to the astonishment of many of his acquaintances and colleagues, who believed that Kitov had become a Doctor of Science a long time ago.

In the late 1950s, before his disgrace, Kitov was one of the developers of the parallelism principle, which was the basis of one of the fastest computers of that time, the M-100 (for the military). Later he promoted “associative programming” and was involved in the development of operating systems, the ALGEM programming language, participated in the release of the fundamental reference guide-encyclopedia “Automation of production and industrial electronics” and other initiatives, and wrote textbooks and monographs.

In the mid-1960s Kitov implemented automated management systems (AMS) in the Ministry of Radio Industry (in close cooperation with the same Glushkov); in the early 1970s he went to work at the Ministry of Health, where he became the founder of medical cybernetics. Kitov was also involved in international organizations: for example, he was the official representative of the USSR in the International Federation for Information Processing, and he chaired the congresses of the International Federation for Medical Informatics.

The last stage of Kitov's life (since 1980) is associated with Pleshka (then the Institute of National Economy, now the Plekhanov Russian Academy of Economics), where he was head of the Computer Science Department.

Because of the conflict with the leadership of the Ministry of Defense, Kitov's name is little known to the general public. His work in VC-1 was classified and the documents on the EGSVC were also marked as “secret”. Only in recent years, mostly after his death in 2005, have Kitov's works become known. But now they are only of academic interest.

Key Features of Cybernetics by S.L. Sobolev, A.I. Kitov and A.A. Lyapunov.

The first positive article on cybernetics in the USSR. Journal Questions of Philosophy, No. 4, August 1955, “Scientific reports and publications”. Article extracted from: https://www.computer-museum.ru/books/cybernetics.htm, translated by Michael A.

In compiling this paper we took into account discussions of papers on cybernetics delivered by the authors at the Power Engineering Institute of the USSR Academy of Sciences, at the Workshop on Machine Mathematics of the Faculty of Mechanics and Mathematics and at the Biology Department of Moscow University, at the Steklov Mathematical Institute, and at the Institute of Precise Mechanics and Computer Engineering of the USSR Academy of Sciences, as well as the remarks of Prof. S. A. Yanovskaya, Prof. A. A. Feldbaum, S. A. Yablonsky, M. M. Bakhmetyev, I. A. Poletaev, M. G. Gaase-Rapoport, L. V. Krushinsky, O. V. Lupanov, and others. We take this opportunity to express our gratitude to all those who took part in the discussion.

The general scientific significance of cybernetics

Cybernetics is a new scientific field that has emerged in recent years. It is a collection of theories, hypotheses, and viewpoints relating to general issues of control and communication in automatic machines and living organisms.

This area of science is developing rapidly and does not yet constitute a sufficiently coherent and operational scientific discipline. At present, three main fields have developed within cybernetics, each of which is of great importance in its own right:

- Information theory, basically the statistical theory of message processing and transmission.

- The theory of fast-acting electronic computing machines as a theory of self-organizing logical processes, similar to human thought processes.

- The theory of automatic control systems, mainly feedback theory, involving the study of the processes of the nervous system, sense organs and other organs of living organisms from a functional point of view.

The mathematical apparatus of cybernetics is very broad: it includes, for example, probability theory, in particular random process theory, functional analysis, function theory, and mathematical logic.

A significant place in cybernetics is occupied by the concept/theory of information. Information is data about the results of certain events that were not known in advance. What is essential is that the actually received data is always only one of a certain number of possible variants of messages.

Cybernetics gives the notion of information a very broad meaning, including both all kinds of external data that can be perceived or transmitted by any particular system, and data that can be produced within the system. In the latter case, the system serves as the source of the messages.

Information can be, for example, the effects of the external environment on an animal or human organism; knowledge and information acquired by human beings through learning; messages intended to be transmitted through a line of communication; raw, intermediate and final data in computing machines, etc.

A new perspective has recently emerged from a study of processes in automatic devices. And this is no accident. Automatic devices are simple enough to not obscure the essence of processes by an abundance of detail, and, on the other hand, the very nature of the functions they perform requires a new approach. While the energetic characteristic of their work is, of course, important in itself, it does not at all touch the essence of the functions they perform. In order to understand the essence of their work, it is necessary, first of all, to base our understanding of the concept of information (data) on the movement of objects.

In the same way as the introduction of the concept of energy allowed us to consider all the phenomena of nature from a unified point of view and reject a number of false theories (phlogiston theory, perpetuum mobile, etc.), so the introduction of the concept of information, a single measure of information quantity, allows us to approach the study of various processes of interaction of bodies in nature from a single common point of view.

When considering the information conveyed by an impact, it must be emphasized that its character depends on both the impact and the body perceiving the impact. The impact of the source on the affected body does not in general occur directly, but through a series of partial impacts which mediate this relationship. The totality of the means by which the impact reaches the affected body is called an information transmission channel, or, briefly, a communication channel.

Common to all types of information is that information or messages are always given as some form of a time sequence, i.e. as a function of time.

The amount of information transmitted, and even more so the effect of the information on the recipient, is not determined by the amount of energy expended in transmitting the information. For example, a telephone conversation can be used to stop a factory, to call a fire brigade, to congratulate someone on a holiday. Nerve impulses from the senses to the brain can carry with them feelings of warmth or coldness, pleasure or danger.

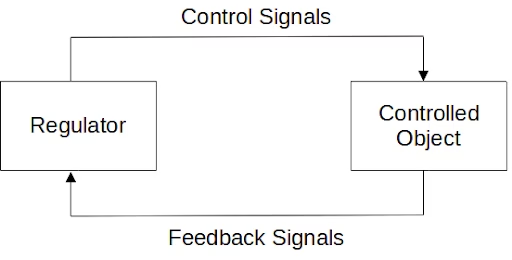

The essence of the principle of control is that the movement and action of large masses, or the transmission and transformation of large quantities of energy, are guided and controlled by small masses and small quantities of energy that carry information. This principle of control underlies the organization and operation of any controlled system, whether an automatic machine or a living organism. Therefore, the theory of information, which studies the laws of transmission and transformation of information (signals), is the basis of cybernetics, which studies the general principles of control and communication in automatic machines and living organisms. Any automatically controlled system consists of two main parts, the controlled object and the control system (regulator), and is characterized by the presence of a closed information chain (Fig. 1).

From the controller to the object, information is transmitted in the form of control signals; in the controlled object, large amounts of energy (relative to the energy of the signals) are converted into work under the influence of the control signals. The information circuit is closed by feedback signals, which are information about the actual state of the controlled object coming from the object to the controller. The purpose of any controller is to convert the information describing the actual state of the object into control information, i.e. information that should determine the future behavior of the object. A controller is therefore a device for the transformation of information. The laws of information conversion are determined by the operating principles and the design of the controller.

In the simplest case, a controller can simply be a linear converter in which the feedback signal indicating the deviation of the controlled object from the desired position (the error signal) is linearly converted into a control signal. The most complex examples of control systems are animal and human nervous systems. The feedback principle is also essential for these systems. When an action is performed, control signals are transmitted as nerve impulses from the brain to the executing organ and finally cause muscle movement. The feedback line is represented by sensory signals and kinaesthetic muscle position signals, which are transferred to the brain and describe the actual position of the actuators.
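The closed information loop with a linear controller can be illustrated with a short simulation. This is only a minimal sketch and is not taken from the original paper: the function name `simulate_loop`, the parameters `setpoint` and `gain`, and the very simple model of the controlled object (its state changes by exactly the control signal at each step) are assumptions made for illustration.

```python
def simulate_loop(setpoint, initial_state, gain, steps):
    """Simulate a linear controller acting on a simple controlled object.

    Hypothetical model: at every step the object's state changes by exactly
    the control signal. Real controlled objects are far more complex.
    """
    state = initial_state
    history = [state]
    for _ in range(steps):
        error = setpoint - state   # feedback: deviation from the desired position
        control = gain * error     # linear conversion of the error into a control signal
        state = state + control    # the object responds to the control signal
        history.append(state)
    return history


trajectory = simulate_loop(setpoint=10.0, initial_state=0.0, gain=0.5, steps=20)
print(trajectory[:4])   # first few states of the controlled object
print(trajectory[-1])   # state after 20 steps, close to the setpoint
```

With a gain between 0 and 1 in this toy model, each pass around the loop removes a fixed fraction of the remaining error, so the state approaches the desired position without the controller ever supplying the bulk of the energy itself.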

It has been established[2] that the processes taking place in closed feedback circuits of living organisms can be mathematically described and come close to the processes taking place in complex non-linear systems of automatic regulation of mechanical devices with regards to their characteristics.

In addition to the numerous and complex closed feedback loops necessary for organisms to move and act in the external world, every living organism has a large number of complex and diverse internal feedback loops designed to maintain the normal condition of the organism (regulation of temperature, chemical composition, blood pressure, etc.). This system of internal regulation in living organisms is called a homeostat.

The basic characteristic of any controller as an information processing device is the law of information conversion that the controller implements.

These laws in different controllers can vary considerably, from linear transformation in the simplest mechanical systems to the most complex laws of human thought.

One of the main tasks of cybernetics is to study the principles of construction and operation of various controllers and to create a general theory of control, i.e. a general theory of information transformation in controllers. The mathematical basis for the creation of such a theory of information transformation is mathematical logic, a science that studies the relations between premises and consequences by methods of mathematics. In essence, mathematical logic provides a theoretical justification for information transformation methods as well, which justifies the close connection of mathematical logic and cybernetics.

On the basis of mathematical logic, numerous special applications of this science to various information processing systems have appeared and are now rapidly developing: theory of relay-contact circuits, theory of the synthesis of electronic computing and control circuits, theory of programming for electronic automatic calculating machines, etc.

The main problem to be solved when developing the scheme (circuit) of any information processing device is the following. A set of possible input information is specified, together with a function defining how the output information depends on the input information; that is, both the volume of information to be processed and the law of its processing are given. An optimal scheme must then be built that realizes this dependence, i.e. processes the given amount of information.

It is possible to imagine a solution to this problem in which a separate circuit is built to implement each dependency, i.e. to transmit each possible variant of information. This is the simplest and least advantageous way of solving it. The task of the theory is to ensure the transmission of the given quantity of information by combining such separate circuits while using the minimum number of physical elements needed to construct them. At the same time, it is necessary to achieve reliability and noise immunity of the systems.
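The contrast between one circuit per variant and a combined circuit with fewer elements can be sketched with a small Boolean example. The three-input majority function used here is a hypothetical illustration chosen for this note, not an example from the paper, and the function names are likewise assumptions.

```python
from itertools import product


def majority_per_variant(a, b, c):
    """One separate term ("circuit") for every input combination that yields 1."""
    return (
        (not a and b and c)
        or (a and not b and c)
        or (a and b and not c)
        or (a and b and c)
    )


def majority_shared(a, b, c):
    """The same dependence realized with fewer elements by sharing structure."""
    return (a and b) or (c and (a or b))


# Exhaustively confirm that both schemes realize the same input-output dependence.
equivalent = all(
    bool(majority_per_variant(a, b, c)) == bool(majority_shared(a, b, c))
    for a, b, c in product([False, True], repeat=3)
)
print(equivalent)
```

The first version needs four three-input terms, while the second needs only two AND elements and two OR elements; both produce identical outputs for all eight input combinations.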

However, in practical engineering solutions to these problems it is not possible to realise fully optimal options. It is necessary to consider the feasibility of constructing machines from a certain number of standard assemblies and parts, without greatly increasing the number of different design variants in the pursuit of optimality.

The task therefore becomes one of finding a trade-off between the requirements of an optimal solution and the feasibility of implementing the schemes: assessing the quality of schemes and units built from available standard parts by how closely they approach the optimum solution, and determining how to use the available standard parts and units so as to come as close as possible to an optimum solution.

The same is also true of programming for solving mathematical problems on fast-acting computing machines. Composing a program consists of determining the sequence of operations performed by the machine that will yield the solution to the problem. This will be explained in more detail below.

The requirement of optimal programming in terms of minimal machine running time is practically not fulfilled, because it is associated with too much work to compose each program. Therefore, program variants that are not too far removed from the optimal variants can be formed by more or less standard known techniques.

The problems considered here are special cases of the general problem solved by statistical information theory - the problem of the optimal way to transmit and transform information.

Information theory establishes the possibility of representing any information, regardless of its specific physical nature (including information given by continuous functions), in a unified way as a set of individual binary elements - the so-called quanta of information, that is, elements, each of which can have only one of two possible values: "yes" or "no".
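As a small illustration of this representation, the sketch below encodes the choice of one message out of a finite set as a sequence of yes/no elements and recovers it again. The function names `encode_choice` and `decode_choice` are hypothetical, and the example covers only the discrete case; representing continuous functions requires further steps that are not shown here.

```python
def encode_choice(index, n_variants):
    """Represent the choice `index` out of `n_variants` as yes/no elements."""
    if not 0 <= index < n_variants:
        raise ValueError("index must identify one of the possible variants")
    # Number of binary elements needed to distinguish n_variants messages.
    width = max(1, (n_variants - 1).bit_length())
    return [bool((index >> shift) & 1) for shift in reversed(range(width))]


def decode_choice(elements):
    """Recover the variant number from its yes/no elements."""
    index = 0
    for element in elements:
        index = (index << 1) | int(element)
    return index


elements = encode_choice(5, 8)
print(["yes" if e else "no" for e in elements])
print(decode_choice(elements))
```

Any of eight possible messages is thus fully identified by three binary elements, regardless of what the messages physically are.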

Information theory deals with two main issues: a) the measurement of the quantity of information and b) the quality or reliability of the information. The former involves questions of the bandwidth and capacity of various information-processing systems; the latter involves questions of the reliability and immunity of these systems.

The amount of information provided by a source or transmitted in a given time via a channel is measured by the logarithm of the total number (n) of different possible equally likely versions of the information that could have been provided by that source or transmitted in a given time.

$$I = \log_a n \qquad (1)$$

The logarithmic measure is adopted based on the conditions of maintaining proportionality between the amount of information that can be transmitted in a period of time and the size of that period and between the amount of information that can be stored in a system and the number of physical elements (such as relays) needed to build that system. The choice of the logarithm base is determined by the choice of unit of measure for the quantity of information. With a base of two, the unit of information quantity is the simplest, elementary message about the result of choosing one of the two equally probable possibilities "yes" or "no". To denote this unit of information quantity, a special name "bid[3]" (from the initial letters of the term "binary digit"), was introduced.
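The proportionality argument for the logarithmic measure can be checked numerically. The following is a minimal sketch using base two; the helper name `information_bits` and the specific numbers (five relays, eight messages per interval) are assumptions chosen for illustration.

```python
import math


def information_bits(n_variants):
    """Amount of information, in binary units, for n equally likely variants."""
    if n_variants < 1:
        raise ValueError("there must be at least one possible variant")
    return math.log2(n_variants)


# Storage: k two-state relays can hold 2**k distinct states, so the amount
# of information grows in proportion to the number of relays.
relays = 5
print(information_bits(2 ** relays))

# Transmission: if 8 different messages are possible in one interval, then
# 8 * 8 are possible in two intervals, and the amount of information doubles.
per_interval = 8
print(information_bits(per_interval), information_bits(per_interval ** 2))
```

A measure based directly on the number of variants would grow exponentially with the number of relays or the length of the interval; taking the logarithm is what makes it proportional.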

The simplest particular case of determining the amount of information is when the individual possible variants of a message have the same probability.

Due to the mass nature of information, its statistical structure is introduced to our consideration. Individual variants of possible data, such as individual messages in communication theory, are not considered as given functions of time, but as a set of different possible variants defined together with their probabilities of occurrence.

In general, individual variants of data have different probabilities, and the amount of information in a message depends on the distribution of these probabilities.

The mathematical definition of the quantity of information is as follows. In probability theory, a complete system of events is a group of events $A_1, A_2, \ldots, A_n$ in which one and only one of these events necessarily occurs in each trial. For example, a roll of 1, 2, 3, 4, 5 or 6 on a die; heads or tails on a coin toss. In the latter case there is a simple alternative, i.e. a pair of opposite events.

A finite scheme is a complete system of events $A_1, A_2, \ldots, A_n$, given together with their probabilities $P_1, P_2, \ldots, P_n$:

$$A = (A_1, A_2, \ldots, A_n;\; P_1, P_2, \ldots, P_n)$$

where:

$$\sum_{k=1}^{n} P_k = 1 \quad \text{and} \quad P_k \ge 0 \qquad (2)$$

Any finite scheme is inherently uncertain to some degree, i.e. only the probabilities of possible events are known, but which event will actually occur is uncertain.

Information theory introduces the following characteristic to estimate the degree of uncertainty of any finite scheme of events:

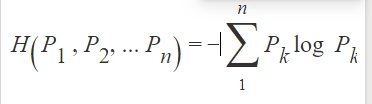

where the logarithms can be taken to an arbitrary base, provided it is always the same one, and where for $P_k = 0$ we take $P_k \log P_k = 0$[4]. The value $H$ is called the entropy of a given finite scheme of events (see B. Shannon[5], "Mathematical Theory of Communication", in the translation collection "Transmission of electrical signals in the presence of disturbances", Moscow, 1953, and A. Y. Khinchin, "The Notion of Entropy in Probability Theory", journal “Advances in Mathematical Sciences”, Vol. 3, 1953). It has the following properties:

- The value $H(P_1, P_2, \ldots, P_n)$ is continuous with respect to $P_k$.

- The value $H(P_1, P_2, \ldots, P_n) = 0$ if and only if one of the numbers $P_1, P_2, \ldots, P_n$ is equal to one and the others are zero, i.e. entropy is zero when there is no uncertainty in the finite scheme.

- The value $H(P_1, P_2, \ldots, P_n)$ has a maximum value when all $P_k$ are equal to each other, i.e. when the finite scheme has the largest uncertainty. In this case, as it is easy to see,

$$H(P_1, P_2, \ldots, P_n) = -\sum_{k=1}^{n} P_k \log_a P_k = \log_a n \qquad (4)$$

In addition, entropy has the property of additivity, i.e. the entropy of two independent finite schemes taken together is equal to the sum of the entropies of these finite schemes.

Thus, it can be seen that the chosen entropy expression is quite convenient and fully characterizes the degree of uncertainty of a given finite scheme of events.

The theory of information proves that the only form that satisfies the three above properties is the accepted form for expressing entropy:

$$H = -\sum_{k=1}^{n} P_k \log_a P_k$$

Data on the results of a test whose possible outcomes were determined by a given finite scheme $A$ represent some information that removes the uncertainty that existed before the test. Naturally, the greater the uncertainty in the finite scheme, the more information we obtain from the test and the removal of that uncertainty. Since a characteristic of the degree of uncertainty of any finite scheme is the entropy of that finite scheme, it is reasonable to measure the amount of information provided by the test by the same quantity.

Thus, in the general case, the amount of information of any system that has different probabilities of possible outcomes is determined by the entropy of the finite scheme that characterizes the behavior of that system.

Since the unit of information is taken to be the most simple and uniform type of information, namely the message about the result of a choice between two equally likely options, the logarithmic basis in the entropy expression is taken to be two.

As can be seen from (4), in the case of a finite scheme with equally probable events, formula (1) is obtained as a special case of (3).
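The properties listed above can be checked numerically. The following is a minimal sketch in Python, not part of the original paper; the function names `entropy` and `joint_scheme` are illustrative assumptions. It computes the entropy of a finite scheme with base-two logarithms and checks the zero-uncertainty case, the equal-probability case and additivity for two independent schemes.

```python
import math

def entropy(probs, base=2.0):
    """Entropy of a finite scheme: the sum of -P_k * log(P_k) over all events."""
    if any(p < 0 for p in probs):
        raise ValueError("probabilities must be non-negative")
    if not math.isclose(sum(probs), 1.0, abs_tol=1e-9):
        raise ValueError("probabilities must sum to one")
    # Events with P_k = 0 contribute nothing, following the convention in the text.
    return sum(-p * math.log(p, base) for p in probs if p > 0)

def joint_scheme(p, q):
    """Probabilities of the paired outcomes of two independent finite schemes."""
    return [pi * qj for pi in p for qj in q]

die = [1 / 6] * 6      # six equally likely outcomes
coin = [0.5, 0.5]      # a simple alternative
biased = [0.9, 0.1]    # unequal probabilities

print(entropy(coin))                     # one binary unit
print(entropy(die), math.log2(6))        # both values agree, as in (4)
print(entropy([1.0, 0.0]))               # no uncertainty
print(entropy(biased))                   # less than for the fair coin
print(entropy(joint_scheme(die, coin)),  # additivity: the two values agree
      entropy(die) + entropy(coin))
```

With `base=2.0` the result is expressed in the unit described above, the message about a choice between two equally likely options.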

Information theory provides a very general method for assessing the quality of information, namely its reliability. Any information is considered as a result of two processes: a regular process designed to transmit the required information, and a random process caused by the action of interference. This approach to assessing the quality of various systems is common to a number of sciences: radio engineering, automatic control theory, communication theory, mathematical machine theory, etc.

Information theory proposes that the quality of information should not be measured by the ratio of the levels of the useful signal to the interference, but by the statistical method - the probability of getting the correct information.

Information theory studies the relationship between the quantity and quality of information; it investigates methods of transforming information in order to maximize the efficiency of various information processing systems and to find out the optimum principles for the construction of such systems.

Of great importance in information theory, for example, is the notion that the quantity of information can be increased by reducing the quality, and conversely, the quality of information can be improved by reducing the quantity of information transmitted.
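One simple way to see this trade-off is a repetition scheme, which is an illustration chosen here and not a method named in the paper. Each binary symbol is sent `n` times over a line that flips each copy independently with probability `p_error`, and the receiver takes a majority vote. The sketch below (the function name and the value 0.1 are assumptions) shows the probability of correct reception rising as the share of useful symbols falls.

```python
from math import comb

def p_correct_repetition(n, p_error):
    """Probability that a majority vote over n copies of one binary symbol
    recovers it, when each copy is flipped independently with p_error."""
    if n % 2 == 0:
        raise ValueError("use an odd n so the majority vote is never tied")
    max_flips = n // 2  # the vote still succeeds with at most this many flips
    return sum(
        comb(n, k) * p_error**k * (1 - p_error) ** (n - k)
        for k in range(max_flips + 1)
    )

for n in (1, 3, 5, 9):
    rate = 1 / n  # useful symbols per transmitted symbol (the "quantity")
    quality = p_correct_repetition(n, 0.1)
    print(f"copies={n}  rate={rate:.3f}  P(correct)={quality:.5f}")
```

Here quality is measured as the text proposes, by the probability of getting the correct information, and not by a signal-to-interference ratio.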

In addition to broad scientific generalizations and the development of a new, unified approach to the study of various body-interaction processes, information theory also points to important practical ways of developing communication technology. For example, the methods developed on the basis of information theory for the reception of weak signals in the presence of interference that significantly exceeds the power of the received signals are extremely important at the present time. A promising way, indicated by information theory, is to improve the efficiency and reliability of communication lines by moving from the reception of individual, single signals to the reception and analysis of aggregates of these signals and even to the reception of entire messages at once. However, this path is currently still encountering serious practical difficulties, mainly related to the need to have sufficiently large and fast storage devices in communication equipment.

In the study of information, cybernetics combines common elements from various fields of science: communication theory, filter and anticipation theory, theory of tracking systems, theory of automatic control with feedback, theory of electronic counting machines, physiology, etc., treating the various objects of these sciences from a unified perspective as information processing and transmission systems.

There is no doubt that the creation of a general theory of automatically controlled systems and processes, the elucidation of general laws of control and communication in various organized systems, including in living organisms, will be of paramount importance for the further successful development of the complex of sciences. The main importance and value of the new scientific trend - cybernetics - lies in raising the question of creating a general theory of control and communication, generalizing the achievements and methods of various distinct fields of science.

The objective reasons that led to the emergence of cybernetics as a branch of science were the great achievements in the development of a whole complex of theoretical disciplines, such as the theory of automatic control and oscillations, the theory of electronic computing machines, the theory of communication, etc., and the high level of development of tools and methods of automation, which provided ample practical opportunities to create various automatic devices.

It is necessary to emphasize the great methodological importance of the question posed by cybernetics on the need to generalize, to combine in a broad way the results and achievements of different fields of science, developing in a certain sense in isolation from each other, such as physiology and automatics, theory of communication and statistical mechanics.

This isolation, separation of the individual fields of science, due primarily to differences in the specific physical objects of study, is reflected in different research methods and terminology, creating to some extent artificial divides between the individual fields of science.

At certain stages in the development of science, the interpenetration of different sciences, the exchange of achievements, experiences and their generalization is inevitable, and this should contribute to the rise of science to a new, higher level.

Opinions have been expressed about the need to limit the scope of the new theory mainly to the field of communication theory, on the grounds that broad generalizations could lead to harmful confusion at present. Such an approach cannot be considered to be correct. A number of concepts (in which cybernetics has played an important role) of general theoretical importance have already been identified. These include, first of all, the feedback principle, which plays a major role in the theory of automatic control and oscillation and is of great importance for physiology.

The idea of considering the statistical nature of information-system interaction is of general theoretical significance. For example, the concept of entropy in probability theory is of general theoretical significance, and its particular applications apply to the field of statistical thermodynamics as well as to the field of communication theory, and possibly to other fields as well. These general laws have an objective character and science cannot ignore them.

The new scientific field is still in its formative stages, and even the scope of the new theory has not yet been clearly defined; new data are coming in in a continuous flow. The value of the new theory lies in the broad generalization of the achievements of the various specific sciences and in the development of general principles and methods. The challenge is to ensure the successful development of the new scientific discipline in our country.

2. Electronic counting machines and the nervous system

Along with investigating and physically modeling the processes that take place in living beings, cybernetics is concerned with the development of more sophisticated and complex automata capable of performing some of the functions inherent in human thinking in its simplest forms.

It should be noted that modeling and the method of analogies have been applied constantly in scientific research, both in the biological sciences and in science and engineering. At present, thanks to the development of science and technology, it has become possible to apply the method of analogies more deeply: to study the laws governing the activity of the nervous system, the brain, and other human organs more fully by means of complex electronic machines and devices and, on the other hand, to use the principles and laws of the vital functions of living organisms to create more perfect automatic devices.

The fact that cybernetics takes on such challenges is undoubtedly a positive aspect of this field of great scientific and applied importance. Cybernetics notes the general analogy between the working principle of the nervous system and that of an automatic counting machine, which is the presence of self-organizing counting and logical thinking processes.

The basic principles of electronic computing machines are as follows.

A machine can perform several specific elementary operations: adding two numbers, subtracting, multiplying, dividing, comparing numbers by value, comparing numbers by signs, and some others. Each such operation is performed by the machine as the effect of one specific command which determines which operation on which numbers the machine is to perform and where the result of the operation is to be stored.

A sequence of such commands makes up the machine's work program. The program must be written by a human mathematician in advance and entered into the machine before the problem is solved, after which the entire solution of the problem is carried out by the machine automatically, without human intervention. To be entered into the machine, each program instruction is coded as a symbolic number, which the machine decodes accordingly during problem-solving, and the necessary instruction is executed.

An automatic computing machine has the ability to store – to remember – a large number of numbers (hundreds of thousands of numbers), output the numbers needed for the operation automatically during the solution process, and record the results of the operation again. The symbolic numbers, which signify the program, are stored in the machine in the same memory devices as the normal numbers.

The following two features are very important from the point of view of the principle of electronic computing machines:

- The machine has the ability to automatically change the course of the calculation process depending on the current calculation results obtained. Normally, program commands are executed by the machine in the order in which they are written in the program. However, even with manual calculations it is often necessary to change the course of calculations (e.g. the type of the calculation formula, the value of a certain constant, etc.), depending on what results are obtained during the calculations. This is ensured in a machine by the introduction of special transition operations, which allow different ways of continuing the calculation to be chosen depending on previous results.

- Since the machine program, represented in the form of a sequence of symbolic numbers, is stored in the same memory unit of the machine, as conventional numbers, the machine can perform operations not only on conventional numbers, representing the values involved in solving the problem, but also on the symbolic numbers representing the program commands. This property of the machine ensures the possibility of transformation and repetition of the whole program or its separate parts in the process of calculations which provides a considerable reduction of initial program input into the machine and sharply reduces labor input into the process of program compilation.

These two principal features of electronic computing machines are the basis for the realization of a fully automatic computing process. They allow a machine to evaluate the result obtained in the process of computation by certain criteria and to work out for itself a program of further work, based only on some general initial principles laid down in the program initially entered into the machine.
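Both features can be illustrated with a toy machine. The sketch below is a hypothetical design written for this illustration, not a description of any real Soviet machine: the command coding (operation × 1000 + address), the five operation codes and the memory layout are all assumptions. Commands and numbers share one memory, a conditional transition repeats part of the program, and the program rewrites the address part of one of its own commands to step through a table of numbers.

```python
HALT, LOAD, ADD, STORE, JUMP_IF_NEG = 0, 1, 2, 3, 4
TOTAL, ONE, COUNTER, DATA = 12, 13, 14, 20  # assumed memory layout

def encode(op, addr=0):
    """Code a command as one symbolic number: operation * 1000 + address."""
    return op * 1000 + addr

def run(memory, max_steps=10_000):
    """Execute the program held in memory, starting from cell 0."""
    acc, pc = 0, 0
    for _ in range(max_steps):
        op, addr = divmod(memory[pc], 1000)  # decode the symbolic number
        pc += 1
        if op == HALT:
            return memory
        elif op == LOAD:
            acc = memory[addr]
        elif op == ADD:
            acc += memory[addr]
        elif op == STORE:
            memory[addr] = acc
        elif op == JUMP_IF_NEG:      # transition depending on a previous result
            if acc < 0:
                pc = addr
        else:
            raise ValueError(f"unknown operation code {op}")
    raise RuntimeError("step limit reached")

def build_sum_program(data):
    """Program that sums a non-empty table of numbers stored from cell DATA."""
    memory = [0] * (DATA + len(data))
    memory[0:11] = [
        encode(LOAD, TOTAL),
        encode(ADD, DATA),       # cell 1: its address part is rewritten each pass
        encode(STORE, TOTAL),
        encode(LOAD, 1),         # fetch the command in cell 1 as an ordinary number
        encode(ADD, ONE),        # advance its address part by one
        encode(STORE, 1),
        encode(LOAD, COUNTER),
        encode(ADD, ONE),
        encode(STORE, COUNTER),
        encode(JUMP_IF_NEG, 0),  # repeat while numbers remain
        encode(HALT),
    ]
    memory[ONE] = 1
    memory[COUNTER] = -len(data)
    memory[DATA:] = data
    return memory

result = run(build_sum_program([5, 7, 11, 13]))
print(result[TOTAL])  # 36
```

Eleven commands handle a table of any length that fits in memory, which is the reduction in program size that the second feature makes possible.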

These features represent the basic and most remarkable property of modern electronic computing machines, which provides broad possibilities of using machines also for solving logic problems, modeling logic circuits and processes, modeling various probabilistic processes and other applications. These possibilities are still far from being fully elucidated.

Thus, the basic principle in the operation of a counting machine is that there is always some self-organizing process which is determined, on the one hand, by the nature of the input data and the underlying principles of the initially entered program and, on the other hand, by the logical properties of the machine design itself.

The theory of such self-organizing processes, in particular those subject to the laws of formal logic, is primarily that part of the theory of electronic computing machines that cybernetics deals with.

In this relationship, cybernetics draws an analogy between the operation of a computing machine and the operation of the human brain in solving logical problems.

Cybernetics notes not only the analogy between the working principle of the nervous system and that of the counting machine in the presence of self-organizing counting and logical thinking processes but also the analogy between the working mechanism of the machine and the nervous system.

The whole process of a computing machine in solving any mathematical or logical problem consists of a huge number of consecutive binary choices, with the possibilities of subsequent choices being determined by the results of previous choices. Thus, the operation of a counting machine consists in implementing a long and continuous logical chain, each link of which can have only two values: "yes" or "no".

The specific conditions that occur each time an individual link is executed ensure that one of the two states is always a well-defined and unambiguous choice. This choice is determined by the initial task data, the solution program and the logical principles incorporated in the machine design.

This is particularly evident in the case of machines working with a binary number system.

In the binary number system, unlike the conventional decimal number system, the base of the system is not the number 10 but 2. Binary has only two digits, 0 and 1, and any number is represented as a sum of powers of two. For example, 25 = 1×2⁴ + 1×2³ + 0×2² + 0×2¹ + 1×2⁰ = 11001.[6]
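The conversion in the example can be reproduced by repeated division by two. This is a short illustrative sketch; the function names `to_binary` and `from_binary` are assumptions introduced here.

```python
def to_binary(n):
    """Binary digits of a non-negative integer, most significant digit first."""
    if n < 0:
        raise ValueError("n must be non-negative")
    digits = []
    while True:
        n, remainder = divmod(n, 2)  # each remainder is the next binary digit
        digits.append(str(remainder))
        if n == 0:
            break
    return "".join(reversed(digits))

def from_binary(digits):
    """Value of a binary numeral as a sum of powers of two."""
    return sum(int(d) * 2**power for power, d in enumerate(reversed(digits)))

print(to_binary(25))         # 11001
print(from_binary("11001"))  # 25
```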

All actions in binary arithmetic are reduced to a series of binary choices.

It is not difficult to see that any operation on numbers written in binary is an operation to find individual digits of the result, that is, to find quantities taking only two values of 1 or 0, depending on the values of all the digits of each of the inputs.

Consequently, obtaining a result is reduced to calculating several functions taking two values based on arguments taking two values. It can be proven that any such function is represented as some polynomial of its arguments, that is, an expression consisting of combinations of these arguments connected by addition and multiplication. The multiplication of such numbers is obvious; as for addition, it must be understood conventionally, taking 1+1=0, that is, considering two to be equivalent to zero.

Instead of arithmetic addition, we can introduce another, "logical" addition, in which 1+1=1, and again by just a combination of two operations we get any so-called logical function of many variables.

This makes it easy to construct any logic machine circuit by combining two simple circuits, one performing addition and the other performing multiplication separately.
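As a sketch of this idea, the addition of two binary numbers can be built from nothing but multiplication and the conventional addition in which 1+1=0. The code below is an illustration under assumptions: the function names are hypothetical, and the formulas for the sum digit and the carry are the standard ones for a one-digit adder and are not given in the paper. The "logical" addition is written here as the polynomial a + b + ab.

```python
def add_mod2(*bits):
    """Conventional addition of two-valued quantities, taking 1 + 1 = 0."""
    return sum(bits) % 2

def mul(*bits):
    """Multiplication of two-valued quantities."""
    result = 1
    for b in bits:
        result *= b
    return result

def logical_add(a, b):
    """'Logical' addition with 1 + 1 = 1, as the polynomial a + b + ab."""
    return add_mod2(a, b, mul(a, b))

def full_adder(a, b, carry_in):
    """One digit of binary addition expressed as two polynomials."""
    digit = add_mod2(a, b, carry_in)
    carry_out = add_mod2(mul(a, b), mul(a, carry_in), mul(b, carry_in))
    return digit, carry_out

def add_binary(x, y):
    """Add two binary numerals given as strings of 0s and 1s."""
    width = max(len(x), len(y))
    x, y = x.zfill(width), y.zfill(width)
    carry, digits = 0, []
    for a, b in zip(reversed(x), reversed(y)):  # least significant digit first
        digit, carry = full_adder(int(a), int(b), carry)
        digits.append(str(digit))
    digits.append(str(carry))
    return "".join(reversed(digits)).lstrip("0") or "0"

print(add_binary("11001", "111"))  # 25 + 7 written in binary
```

Every digit of the result is a two-valued function of the two-valued input digits, which is the reduction described in the preceding paragraphs.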

A logic machine therefore consists of elements taking two positions.

In other words, the machine device is a set of relays with two states: "on" and "off". At each stage of the calculation, each relay takes a certain position dictated by the positions of the group or all relays in the previous stage of the operation.

These stages of operation can be definitively 'synchronized' from a central synchronizer, or the action of each relay can be delayed until all relays that should have acted earlier in the process have passed all required cycles. Physically, relays can be different: mechanical, electromechanical, electrical, electronic, etc.

It is known that an animal's nervous system contains elements whose action corresponds to the operation of relays.

These are what are known as neurons, or nerve cells. Although the structure of neurons and their properties are rather complex, they work according to the "yes" or "no" principle in their normal physiological state. Neurons are either resting or activated, and during activation they go through a series of stages almost independent of the nature and intensity of the stimulus. First comes the active phase, transmitted from one end of the neuron to the other at a certain rate, followed by the refractory period, during which the neuron is not activated. At the end of the refractory period the neuron remains inactive, but can already be activated again, i.e. the neuron can be considered as a relay with two states of activity.

With the exception of neurons that receive activation from free ends, or nerve endings, each neuron receives activation from other neurons at connection points called synapses. The number of these connection points varies from a few to many hundreds for different neurons.

The transition of a given neuron to the activated state depends on the combination of incoming activation impulses from all its synapses and on the state the neuron was in before. If a neuron is neither activated nor refractory, and the number of synapses from neighboring neurons that are in the activated state within a certain, very short coincidence interval exceeds a certain limit, then this neuron will be activated after a certain synaptic delay. This picture of neuron activation is greatly simplified.

The "limit" may depend not just on the number of synapses, but also on their "expectation" and their geometrical arrangement. In addition, there is evidence that there are synapses of a different nature, the so-called 'inhibition synapses', which either absolutely prevent the activation of a given neuron or raise the limit of its activation by conventional synapses.

However, it is clear that certain specific combinations of impulses from neighboring neurons that are in an activated state and have synaptic connections to a given neuron will drive that neuron into an activated state, while other combinations will not affect its state.
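The simplified picture of a neuron as a relay can be sketched as follows. This is a toy model under stated assumptions, not a physiological one: time is divided into discrete steps, the class name `Neuron` and its parameters are hypothetical, and inhibition is taken in its absolute form, where any active inhibition synapse blocks activation.

```python
class Neuron:
    """Two-state element: activated when enough synapses are active at once."""

    def __init__(self, limit, refractory_steps=1):
        self.limit = limit                      # active synapses must exceed this
        self.refractory_steps = refractory_steps
        self._rest_left = 0                     # remaining refractory steps

    def step(self, excitatory, inhibitory=()):
        """Advance one time step and return True if the neuron is activated."""
        if self._rest_left > 0:   # refractory period: cannot be activated
            self._rest_left -= 1
            return False
        if any(inhibitory):       # absolute inhibition (assumed variant)
            return False
        if sum(excitatory) > self.limit:
            self._rest_left = self.refractory_steps
            return True
        return False

neuron = Neuron(limit=1)
print(neuron.step([1, 1, 0]))       # two active synapses exceed the limit
print(neuron.step([1, 1, 1]))       # refractory, so no activation
print(neuron.step([1, 0, 0]))       # too few active synapses
print(neuron.step([1, 1, 0], [1]))  # blocked by an inhibition synapse
```

The synaptic delay, the weighting of individual synapses and their geometrical arrangement are all left out of this sketch.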

A very important function of the nervous system and computing machines is memory.

There are several types of memory available in computing machines. Volatile memory ensures that the data currently required for an operation is quickly stored and output. After a given operation has been performed, this memory can be cleared and thus prepared for the next operation. The volatile memory in machines is implemented by means of electronic trigger cells, electron beam tubes or electro-acoustic delay lines and other electronic or magnetic devices.

There is also a permanent memory for long-term storage in the machine for all data that will be needed in future operations. Permanent memory is implemented in machines by means of magnetic recordings on tape, drum or wire, by means of punched tape, punched cards, photographs and other methods.

Note that the brain, under normal conditions with respect to memory functions, is certainly not a complete analogy of a computing machine. A machine, for example, may solve each new task with a completely cleared memory, whereas the brain always retains more or less previous information.

Thus, the work of the nervous system, the process of thinking, includes a huge number of elementary acts of separate nerve cells/neurons. Each elementary act of a neuron's reaction to stimulus, neuron discharge, is like an elementary act of a computing machine, which has an opportunity to choose only one of two variants in each particular case.

The qualitative difference between the human thinking process and animal thinking is provided by the presence of the so-called second signal system, i.e. the system conditioned by the development of speech, the human language. Humans make extensive use of words in the process of thinking, and perceive words as stimuli; analysis and synthesis processes and abstract thinking processes are carried out with the help of words.

Electronic computing machines have some quite primitive semblance of a language - it is their system of commands, conditional numbers, the system of memory addresses, and the system of various signals implementing various conditional and unconditional transitions in the program, implementing the control of the machine's work. The presence of such a 'language' of the machine is what allows some logical processes particular to human thinking to be realized on the machine.

In general, cybernetics regards electronic computing machines as information processing systems.

In order to investigate the effectiveness and analyze expedient working principles as well as design forms of electronic counting machines, cybernetics proposes to take into account the statistical nature of incoming and processed information - mathematical problems, solution methods, initial data, and results of solutions.

This approach finds its analogy in the principles of the nervous system and brains of animals and humans, which interact with the external environment by developing conditioned reflexes and a process of learning, ultimately through a statistical accounting for external influences.

The principles of electronic computing machines make it possible to implement logical processes on these machines, similar to the process of developing conditioned reflexes in animals and humans.

The machine can be programmed to respond in a certain way when a certain signal is sent to the machine, and depending on how often the signal is sent, the machine will respond more or less reliably. If the signal is not given for a long time, the machine may forget the response.
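One possible way to program such behavior is sketched below. It is an assumption made for illustration, since the paper does not specify a mechanism: the machine keeps a single number, the strength of the response, which grows each time the signal arrives and fades while the signal is absent. The class name `ReflexModel` and the parameters `gain` and `decay` are hypothetical.

```python
import random

class ReflexModel:
    """Response whose reliability depends on how often the signal is given."""

    def __init__(self, gain=0.3, decay=0.9, rng=None):
        self.strength = 0.0  # probability of responding, between 0 and 1
        self.gain = gain     # how much each signal reinforces the response
        self.decay = decay   # how much of the strength survives a silent step
        self.rng = rng or random.Random(0)

    def tick(self, signal):
        """One time step; returns True if the machine responds to the signal."""
        if signal:
            responded = self.rng.random() < self.strength
            # Each presentation moves the strength part of the way towards 1.
            self.strength += self.gain * (1.0 - self.strength)
            return responded
        self.strength *= self.decay  # without the signal the response fades
        return False

model = ReflexModel()
for _ in range(10):
    model.tick(True)
print(round(model.strength, 3))  # close to 1 after frequent signals

for _ in range(50):
    model.tick(False)
print(round(model.strength, 3))  # close to 0 after a long silence
```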

Thus, a computing machine at work is more than just a group of interconnected relays and storages. The machine at work also includes the contents of its storage units, which are never completely erased in the process of computation.

The following statement by N. Wiener is interesting in this regard: "The mechanical brain does not secrete thought as the liver does bile, as has been written about it before, nor does it excrete it in the form of energy, as muscles excrete their energy. Information is information, not matter or energy. No materialism, which does not allow for this, can exist at the present time." Wiener emphasizes in this statement that the "thinking" capacity of a computing machine is not an organic property of the machine itself as a construction, but is determined by the information, in particular the program, which is entered into the machine by the human.

It is necessary to have a clear understanding of the fundamental, qualitative difference between human thought processes and the workings of a computing machine.

Due to the huge number of nerve cells, the human brain encapsulates such a great number of various elementary connections, conditionally reflexive and unconditionally reflexive combinations, which generate unique and most bizarre forms of creativity and abstract thinking, inexhaustible in their richness of variants, content and depth. I. P. Pavlov wrote that the human brain contains such a large number of elementary connections that a man uses hardly half of these possibilities in the course of his life.

However, a machine may have advantages over humans in the narrow specialization of its work. These advantages lie in the indefatigability, infallibility, flawless adherence to laid down operating principles, the underlying axioms of logical reasoning in solving specific human tasks. Electronic computing machines can simulate and realize only separate, narrowly directed processes of human thinking.

Thus, machines do not and certainly never will replace the human brain, just as a shovel or an excavator does not replace human hands, and cars or airplanes do not replace legs.

Electronic computing machines are tools for human thinking, just as other tools are tools for human physical labor. These tools extend the capacity of the human brain, freeing it from the most primitive and monotonous forms of thinking, as, for example, in carrying out calculation work, in reasoning and proof of formal logic, and finally, in carrying out various economic and statistical works (for example, scheduling trains, planning transport, supply, production, etc.). And as instruments of labor - thinking - electronic computing machines have boundless prospects for development. More and more complex and new human thought processes will be realized by means of electronic computing machines. But as far as the replacement of the brain by machines is concerned, their equivalence is unthinkable.

The structures of the brain and the computing machine are qualitatively different. The brain has a locally random structure despite the overall strict organization and specialization of the work of its individual parts. This means that the number of neurons as well as their mutual arrangement and connections can vary in each individual section to a certain extent randomly, while the distribution of functions and connections between individual sections of the brain is strict. Electronic computing machines nowadays cannot have any randomness in their connection schemes, composition of elements and operation.

In connection with this difference in the organization of the brain and the machine, there is also a significant difference in the reliability of their action.

The brain is an exceptionally robust organ. Failure of individual nerve cells does not affect the performance of the brain at all. In a machine, however, the failure of just one component out of hundreds of thousands, or the disruption of just one contact out of hundreds of thousands of contacts, can render the machine completely inoperable.

Further, the human brain itself is continually evolving through the process of creativity, and it is this capacity for infinite self-development that is the main distinguishing feature of the human brain, which will never be fully realized in a machine.

In the same way, the human brain's capacity for creativity: the broad and flexible classification and retrieval of images in memory, the establishment of stable feedback, and the analysis and synthesis of concepts, is virtually impossible for a machine to achieve in full.

The human brain is the creator of all the most complex and perfect machines, which, for all their complexity and perfection, are nothing more than tools of human labor, both physical and mental.

Thus, electronic counting machines can only represent an extremely crude, simplified scheme of thought processes. This scheme is analogous only to individual, narrowly focused human thought processes in their simplest forms, which do not contain elements of creativity.

But although there is a big difference between the brain and a computing machine, the creation and application of electronic computing machines to simulate the processes of higher nervous activity should be of the greatest importance to physiology. Until now, physiology has only been able to observe the workings of the brain. Now there is an opportunity to experiment, to create models, even of the crudest, most primitive thinking processes, and by examining the work of these models, to learn more deeply the laws of higher nervous activity. This means further development of the objective method of studying higher nervous activity, proposed by I.P. Pavlov.

By investigating the working principle of the nervous system and electronic computing machines, the principles of feedback in machines and living organisms, the functions of memory in machines and living things, cybernetics poses the question of the common and the different in the living organism and the machine in a new and generalized way.

This formulation of the problem, if rigorously and deeply observed, can yield far-reaching results in the fields of psychopathology, neuropathology, and the physiology of the nervous system.

It should be noted that reports on the development of some electronic physiological models have already been published in the press. For example, models have been developed to study the heart and its diseases. An electronic computing device has been developed which makes it possible for a blind person to read a normal printed text. This device reads letters and transmits them in the form of sound signals of different tones. After developing this device it was found that its circuitry resembles to a certain extent the set of connections in the human cerebral cortex, which is in charge of visual perception. Thus, electronic simulation methods are beginning to be practically applied in physiology. The task is to set aside talk of the 'pseudoscientific' nature of cybernetics, which often covers up simple ignorance in science, to investigate the limits of admissibility of such modeling, to reveal those limitations in the operation of electronic computing systems that are most essential for the correct representation of the thinking processes under investigation, and to set machine designers the task of creating new, more perfect models.

3. The application of cybernetics

Much attention is now being paid abroad to both theoretical and experimental research in the field of cybernetics. Complex automata that perform a variety of logical functions, in particular automata that can account for complex external environments and memorize their actions, are practically being developed and built.

The development of such automatic machines was made possible by the use of program-controlled electronic computing machines in automation systems. The use of electronic computing machines for automatic control and regulation marks a new stage in the development of automation. Until now automata, often highly complex, were built to operate under specific, predetermined conditions. These automatic machines had constant parameters and operated according to constant rules and laws of regulation or control.

The introduction of electronic computing machines into control systems makes it possible to carry out so-called optimum regulation, or regulation with preliminary estimation of possibilities. In this case, the computing machine, according to the data coming into it, characterizing the current state of the system and the external environment, calculates possible variants of the future behavior of the system with various methods of regulation, taking into account future changes in external conditions, obtained by extrapolation.

By analyzing the obtained solutions on the basis of some optimal control criterion (e.g. minimum control time), the computing machine selects the optimum variant taking into account the past behavior of the system. If necessary, such a control system can also change the parameters of the control system itself, ensuring the optimum course of the control process. The development of such automatic machines is of great economic and military importance.
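The procedure described in the last two paragraphs can be sketched on a deliberately simple example. Everything specific here is an assumption made for illustration: the one-variable system `x <- x - gain*x + d`, the linear extrapolation of the external condition `d`, the list of candidate gains and the function names. Only the overall scheme (extrapolate, compute the variants, select by the minimum control time) comes from the text.

```python
def extrapolate(history, steps):
    """Linear extrapolation of the external condition from its last two readings."""
    slope = history[-1] - history[-2]
    return [history[-1] + slope * (i + 1) for i in range(steps)]

def settling_time(x0, gain, disturbance, tolerance=0.05):
    """Number of steps before the deviation x stays within the tolerance.

    Equals len(disturbance) if the system has not settled within the forecast.
    """
    x, last_bad = x0, -1
    for t, d in enumerate(disturbance):
        x = x - gain * x + d  # assumed model of the regulated system
        if abs(x) > tolerance:
            last_bad = t
    return last_bad + 1

def choose_gain(x0, disturbance_history, candidates, horizon=30):
    """Compute each variant of future behavior and pick the fastest one."""
    forecast = extrapolate(disturbance_history, horizon)
    return min(candidates, key=lambda g: settling_time(x0, g, forecast))

candidates = [0.2, 0.5, 1.0, 1.5, 1.9]
best = choose_gain(x0=1.0, disturbance_history=[0.0, 0.0], candidates=candidates)
print(best)
```

Calling `choose_gain` again as new readings arrive lets the regulator change its own parameter during operation, which corresponds to the last possibility mentioned above.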

Of particular importance is the problem of creating automatic machines that perform various human thinking functions.

A necessary condition for applying electronic computing machines to mechanize any field of mental work or to manage any process is a mathematical statement of the problem: a mathematical description of the process, or a definite logical algorithm for the work in question. Undoubtedly, such non-computational applications of automatic computing machines are of paramount importance and have unusually wide prospects for development as a means of expanding the cognitive possibilities of the human brain and of arming man with ever more perfect instruments of labor, both physical and mental.

Examples of cybernetic technology include automatic translation from one language to another by means of an electronic computing machine; compiling programs for computation on machines by means of the machines themselves; using electronic computing machines to design complex switching and control circuits, to control automatic factories, to plan and control rail and air traffic, etc.; and creating special automatic machines for regulating street traffic, for reading to the blind, etc.

It should be noted that the development of applications of electronic computing machines in automation is of great economic and military importance. By building such automatic machines and investigating their operation, it is possible to study the laws of construction of a whole class of automatic devices that may be applied in industry and in military affairs. For example, the literature (see Tele-Tech 153, 12, No. 8) gives a schematic diagram of a fully automatic plant that, thanks to a nuclear propulsion system, can operate independently for a long time, and also a diagram of a device for automatic control of firing from an aircraft at a flying target.

* * *

It should be noted that until recently our popular literature has misinterpreted cybernetics, kept silent about works on cybernetics, and ignored even the practical achievements in this field. Cybernetics has been called nothing less than an idealistic pseudoscience.

There is no doubt, however, that the idea of studying and modeling the processes that occur in the human nervous system using automatic electronic systems is in itself profoundly materialistic, and advances in this field can only help to establish a materialistic worldview based on the latest advances in modern technology.

Some of our philosophers made a serious mistake: without investigating the essence of the issues, they began to deny the importance of the new direction in science mainly because of the sensational hype raised abroad around this direction, because some ignorant bourgeois journalists engaged in advertising and cheap speculation around cybernetics, and because reactionary figures did their best to use the new direction in science for their own reactionary class interests. It is possible that the intensified reactionary, idealistic interpretation of cybernetics in the popular reactionary literature was deliberately orchestrated to disorient Soviet scientists and engineers, in order to slow down the development of a new and important scientific field in our country.

It should be noted that our press unjustifiably attributed to N. Wiener, the author of cybernetics, statements about the fundamental hostility of automation to man, about the need to replace workers with machines, and about the need to extend the provisions of cybernetics to the study of the laws of social development and the history of human society.

In fact, N. Wiener, in his book Cybernetics (N. Wiener "Cybernetics". N. Y. 1948), says that in a capitalist society, where everything is valued with money and the principle of buying and selling dominates, machines can bring harm, not good, to man.

Further, Wiener writes that in a chaotic capitalist market, the development of automation will lead to a new industrial revolution that will render people with average intellectual capabilities superfluous and condemn them to extinction. And here Wiener writes that the solution lies in the creation of another society, a society where human life is valued in itself and not as an object of purchase and sale.

Finally, Wiener is very cautious about the possibility of applying cybernetics to the study of social phenomena, arguing that although a number of social phenomena and processes can be studied and explained in terms of information theory, human society, apart from statistical factors, has other forces at work which cannot be mathematically analysed, and periods of society in which there is a relative stability of conditions necessary to apply statistical research methods are too short and rare.

It should be noted that Wiener's Cybernetics contains a sharp criticism of capitalist society, although the author does not indicate a way out of the contradictions of capitalism and does not recognize a social revolution.

Foreign reactionary philosophers and writers seek to exploit cybernetics, like any new scientific trend, for their own class interests. By strenuously publicizing and often exaggerating the statements of individual cybernetic scientists about the achievements and prospects of automation, reactionary journalists and writers are carrying out the direct order of the capitalists: to indoctrinate ordinary people into thinking that they are inferior and that ordinary workers can be replaced by mechanical robots, and thereby to diminish the activity of the working masses in the struggle against capitalist exploitation.

We must decisively expose this manifestation of a hostile ideology. Automation in a socialist society serves to make human labor easier and more productive.

We must also fight against the vulgarization of the analogy method in the study of higher nervous activity by rejecting simplistic, mechanistic interpretations of these questions and by carefully studying the limits of applicability of electronic and mechanical models and diagrams to represent thought processes.


- Special Design Bureau number 245, which would later become the Electronic Computing Research Center ↩

- see P. Gulyaev "What is biophysics", Journal “Science and Life” Nr. 1, 1955 ↩

- Trans. Note: Typo in the original ↩

- Adjusted formatting ↩

- Typo in original ↩

- Translator note: Formatting adjusted from the original. ↩