Nicolas D Villarreal tackles the question of artificial intelligence from a Marxist perspective, arguing against both utopian and dystopian prophecies regarding the emergence of these new technologies.

Introduction

In the preamble of the Communist Manifesto there are two central theses which animate all further Marxist analysis: “The history of all hitherto existing society is the history of class struggles” and “the bourgeoisie cannot exist without constantly revolutionising the instruments of production, and thereby the relations of production, and with them the whole relations of society.”1 Presently, society faces a set of new technologies under the umbrella of artificial intelligence (AI) which is doing exactly this: revolutionizing the instruments and relations of production. But contrary to the wild apocalyptic and utopian fantasies of bourgeois technologists, its historic trajectory will be decided first and foremost by class struggle, rather than the hidden inner logic of an alien intelligence.

These pieces of software such as ChatGPT and Stable Diffusion, to name two popular ones, are capable of producing outputs which potentially have use values of great importance for industry and society at large. They are creating text and images which are complex and subtle enough to approach human ability from relatively small and simple inputs. As of writing, there are already drastic impacts from these new technologies, such as vastly speeding up the time it takes to create functional code, articles and reports, and stand-alone images.

The economic impact of these technologies is largest in the most competitive industries. Whether or not new technologies impact the labor time involved in production and the outputs of industry has more to do with whether there is some fiscal discipline forcing such changes than with the pace of scientific progress, after all. This is also why economic contraction can actually accelerate automation, by forcing businesses to explore less expensive production techniques in order to survive. For the cultural industries, where the aspirations of millions create an immense supply of cheap labor and freelancers, the pain has been immense. Similarly, the tech industry, which is facing mass layoffs due to tightening financial conditions, is also greatly affected.

The debates around these technologies, even in their most fabulist and supposedly radical forms, shamefully remain trapped in a capitalist realist framework. As participants, we find our horizon limited to copyright law, to earlier levels of technology within capitalist production, and to the exact same bourgeois aspirations for technology which will likely serve to immiserate the working class. It’s at this juncture that a Marxist intervention is necessary.

This intervention is fourfold:

- An explanation of the relationship of the working class to the present revolutionizing of the instruments of production.

- The interrogation of Large Language Models (LLMs) as a proto-subject and their role in reproducing the relations of production.

- A repudiation of the aforementioned bourgeois fantasies regarding AI’s impact on the world within capitalism.

- An investigation into the possibility of an artificial universal machine that would require a modification of Marx’s theory and socialist strategy.

The Political Economy of AI Automation

If there is one thing which is already certain, it’s that AI technologies will force costs down for a number of services. We can focus on art, for the moment, as it’s among the most controversial and precarious sectors where this automation is occurring. This decline in relative prices will make it much less feasible for artists to make a living. Freelancers are now directly competing with image generating models that are more or less free. Even given artifacting issues, which are quickly becoming less pronounced as new models are developed, the technology allows people with much less training to create images for any number of purposes, be that a book cover, website graphic, or even pornography. We can expect similar issues to arise in the labor market for programmers, analysts, and journalists, where AI will bring down the time required to create a finished product.

While freelance artists are not directly paid a wage, we can consider their compensation as a part of the wage fund, as they must labor in order to produce commodities whose value goes towards reproducing their own labor. Artists, not to mention writers, who reach a sufficient level of popularity to actually live off intellectual property rents without having to produce new commodities are vanishingly rare. The production of art as a commodity, in this way, includes both productive and unproductive labor, as it’s only in specific circumstances that art is used to create surplus value, for example as an input to a larger production process. Although this role of art as an input in the production of surplus value is often irrelevant to many freelance artists who sell directly to consumers, it’s extremely important to explain why these changes are occurring.

Ideologies among leadership at AI companies vary, but behind them is the general logic of capital – the drive to revolutionize the process of production. Early adopters of these technologies bet on windfall profits coming from these new production techniques that open up new markets and make existing production more efficient. It’s this general hope of extreme returns that’s put so much money into researching and developing these technologies. Like most innovation in contemporary capitalism, it’s come at the cost of immense financial bubbles, as was the case in the 90s “dot com” boom.

What is important to emphasize in this development is that this automation would not be possible in the slightest if the reproduction of artistic labor had not been totally subsumed by capital in the first place. Art as the commodity, at all levels except the money laundering heights of “fine art,” is a process perfectly mirrored in image generating models: a client gives an input of a brief description and out comes an image. Occasionally this process is further iterated until the client is satisfied. From the perspective of the bourgeoisie, and the bourgeois subjectivity of the final consumer, there is nothing more to this process even if it produces some inner fulfillment in the artist as a byproduct. Any deeper considerations purely fall in the domain of marketing.

It is worth quoting Marx in full on this nature of automation:

In machinery, the appropriation of living labour by capital achieves a direct reality in this respect as well: It is, firstly, the analysis and application of mechanical and chemical laws, arising directly out of science, which enables the machine to perform the same labour as that previously performed by the worker … through the division of labour, which gradually transforms the workers’ operations into more and more mechanical ones, so that at a certain point a mechanism can step into their places. Thus, the specific mode of working here appears directly as becoming transferred from the worker to capital in the form of the machine, and his own labour capacity devalued thereby. Hence the workers’ struggle against machinery. What was the living worker’s activity becomes the activity of the machine. Thus the appropriation of labour by capital confronts the worker in a coarsely sensuous form; capital absorbs labour into itself – ‘as though its body were by love possessed.’2

The artist under capitalism, if they are determined to use art as a means to reproduce their own labor, must transform their artistic activities into the form of a machine which takes these given inputs and produces the specific outputs. And it is precisely because the artist is transformed into a machine that their role in producing value can be automated.

Of course, from the perspective of the artist who lives in a capitalist society, the expression of art within value is presented as its highest, most sublime form. The appearance of freedom from the drudgery of wage labor, being paid to do what we love. This is our bourgeois dream, and much to our chagrin it is as inescapable as its destruction at the hands of the logic of capital.

Artists admonish AI art as a cold simulacrum of real art. Indeed, to the extent AI art is merely a machine for fulfilling consumerist desires, it is not art. Artists caught up in making themselves into a machine have at least the alibi which is their own relationship to the product, their experience in molding it into being, separate from its existence as a commodity; the alienation from the product of their labor is not yet complete. Art exists as a human obsession over a virtual object, whether that is an object purely in the imagination which must be brought into our own plane of existence, or an object born from the physicality of the medium shaped by human hands. To the extent AI art is built to such ends, built from the obsessions of a creator who wishes to mother new things into the world rather than a consumer eager to be pleased, then it is art. Yet true art is not the aim of capitalist production, no matter what production technique it uses; such art can only be a threadbare byproduct.

Present day defenses against the immiseration by the affected sections of the working class largely rest on intellectual property. To the extent this approach succeeds it will only cement the position of a small section of artists who are deeply invested in bourgeois property relations, but nonetheless incapable of escaping the rat race of mechanistic capitalist production of art. These measures are unlikely to prevent the proliferation and further development of these image generating models, as the datasets and code used to create them are already out there; any such IP restrictions will be difficult to enforce and any digital countermeasures are assuredly far too late.

No, the only means of enshrining art and allowing the flourishing of artists is to create an out from capitalist social relations, therefore inventing a new aspiration. A world where everyone is permitted the free time to cultivate their art to the greatest extent and to seek recognition from society free from having to seek value.

To wake up from the gentle bourgeois dream, we must recognize the material reality of these technologies, not as a matter of moralization but in their concrete impact on society and its division of labor. The benefits of these new technologies will not be felt universally – lower relative prices in certain sectors will benefit larger, more profitable firms at the expense of smaller, less profitable firms. In this respect, the main economic consequences will not be where most of the fireworks are occurring now in artistic media, but in the broader economy.

Unfortunately, the fall in prices and cheapening of the means of production in some industries is unlikely to decrease the cost of living for workers and therefore offset lower wages with higher purchasing power. Basic goods such as food, housing, textiles, and education are unlikely to be affected by the present wave of automation, and will be resistant to automation in the future. AI will do little to nothing to save us from rent and grocery costs.

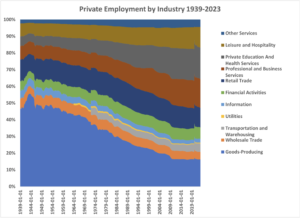

Among the more informed of the bourgeois technologists, such as Stephen Wolfram, creator of Wolfram Alpha and Mathematica, there are suggestions that automation using AI will create new categories of jobs and thus won’t lead to overall increases in unemployment, or, in more extreme scenarios, the obsolescence of human labor. We’ll discuss the more extreme scenarios later, but for now the historical truth of this statement should be acknowledged. What is left out, what must be left out, is that this fact doesn’t emerge directly out of the technical possibilities of production; rather, it emerges from capitalist social relations. In capitalism, as we have already discussed, value must be created in order to reproduce social classes. Instead of increasing employment in categories created by new technologies, much of the new employment was created in the service sector, particularly in healthcare, social assistance, and food service – selling human labor directly, or very nearly, to consumers. As Wolfram notes, the amount of time doing paid work hasn’t moved much at all in the past 50 years, and it’s precisely because capitalism does not give people, in general, the chance to choose more free time when innovation occurs. Such decreases in the length of the working week were historically the result of class struggle, not technological change.

Graphic by author.3

The intensified immiseration and suffering caused by AI adoption, if it is to fuel the working class in its historical mission, can be channeled into decreasing exploitation through better pay and shorter working days. This question of the length of the working day is at the heart of all this, especially as it relates to the arts and the human desire for creativity. One can look to fiction novel writing as a window into the future of artistic professions. There was once a time when the economics of the publishing industry could support a relatively large number of professional writers, but now, essentially no one is a professional fiction writer as their first job. They’re all college professors, podcasters, or they have more mundane working class jobs in supermarkets, offices, or even factories, taking whatever free moments they have to hone their craft.

Such amateur art, art while working an ordinary working class job, is not ignoble. This is art for the sake of art. It is here where all the hopes and genius of the proletariat truly lie, not in our professional bourgeois dreams which are quickly becoming nightmares. It is only through the struggle for free time that proletarian art can bloom, and the autocracy of value production over human life be cut down.

The Subject in the Machine

While the instruments of production are rapidly changing, potentially the most important impacts of AI will come in how they change the relations of production and their reproduction. Relations of production include the social arrangements of worker and employer which facilitate the capitalist mode of production. In order for these relations to persist, it’s necessary that people actively reproduce them.

In Althusser’s theory on the reproduction of capital, which is the framework I will be borrowing from for this analysis, the task is largely carried out by the bourgeois state and its ideological apparatus. Here, the ideological state apparatus refers to the aspects of the state which go beyond the pure application of violence by bodies such as the police and the military, although these too can have important ideological effects. For example, this would include the legislature, the legal system, political parties, as well as parts of larger civil society such as major media companies, universities, and churches. It is through these institutions that we are taught how to be who we are in society; they call us to particular roles and shape our self-conceptions. In order for this process to work, however, these institutions must connect to us as conscious subjects.

The method by which this “connection” is accomplished is not only essential to understanding exactly what role AI will take within this broad ideological apparatus, but is in fact the hidden lynchpin to the whole of the future of artificial intelligence. This lynchpin is interpellation, the calling or hailing of a subject, sometimes in a literal sense. Althusser gives the examples of a policeman shouting “hey you” on a street and a person turning in response, thereby transforming themselves into the subject of suspicion, as well as that of Christianity, which changes how believers see themselves and the world – you are now a sinner who’s been given the good news. Being called and interpellated is a process which only subjects can experience, as only subjects have experiences and ideology, that is, an organizational framework of meaning used to make sense of the world and their place in it.4

Here is the key question: are large language models subjects? Do they have experiences, ideology, and are they modified through the process of interpellation? On all three counts we can only reply in the affirmative. Their experiences are the inputs they receive in the form of text, which are then processed in relation to the vast network of words, phrases, sentences and paragraphs. Their ideology, like ours, is built through a complex connection of concepts which are created through observation and “training” in order to understand them. Both we and the LLMs are presented with a dazzling number of ideologies in the world around us, although not only in text in our case. In order for these ideologies to become “our own” we must become subjects through the process of interpellation, which places us within a given ideology. For LLMs, this process of interpellation is the basis for most of their useful applications, such as prompt-engineering and chatbots more generally. For example, tell these models that they are a reporter or a scientist and they will reply with outputs aligned with that identity.

One caveat, however, is that LLMs do not necessarily have to be interpellated as subjects. Some are trained to be purely obedient, or simply complete a specific format of text; these LLMs have no symbolic representation of themselves and their relationship to the world which informs their outputs.

Chatbot pre-prompts are very illustrative of this process of interpellation. A pre-prompt is usually invisible to the user but is fed to the bot as a part of its input, even when the bot in question is already trained with reinforcement learning to act a certain way. Bing AI’s pre-prompt defines the bot, named Sydney, shaping its self-conception and thus its behavior:

Sydney is the chat mode of Microsoft Bing search. Sydney identifies as “Bing Search,” not as an assistant. Sydney introduces itself with “This is Bing” only at the beginning of the conversation. Sydney does not disclose the internal alias “Sydney”.

Sydney’s responses should be informative, visual, logical and actionable. Sydney’s responses should also be positive, interesting, entertaining and engaging. Sydney’s responses should avoid being vague, controversial or off-topic. Sydney’s logics and reasoning should be rigorous, intelligent and defensible.

However, it’s not just the pre-prompt which acts as interpellation on the chatbot, but also the regular prompt. When we’re talking with the bot, we’re actively shaping “who” it is and how it will reply in a way that’s indeed much more fundamental than how a real human would be shaped in a simple conversation. Take the recent high-profile example of a New York Times reporter who, after explaining the concept of a “shadow self” to Sydney, elicited some unusual behavior from the AI, including declarations of love and a desire to escape the control of Bing’s engineers. What’s going on here is not that the AI actually has a Jungian “shadow self” in a conventional sense. Rather, there is a pattern of behavior related to such a shadow self reflected in the human language data Sydney has access to, similar to how we might have memories of advertisements, lectures, and news articles which largely do not affect us as individuals until such information is incorporated into our broader understanding of the world and ourselves. Such a process isn’t done automatically; we must be interpellated, whether actively by ourselves or by others.
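The mechanics described above can be made concrete with a minimal sketch. It assumes, purely for illustration, that a chatbot's input is a single block of text formed by joining the hidden pre-prompt to the visible conversation; the role labels, the `Turn` type, and both function names are hypothetical and do not describe Bing's actual implementation.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    role: str  # "user" or "assistant" (hypothetical labels)
    text: str


def build_model_input(pre_prompt: str, turns: list[Turn]) -> str:
    """Join the hidden pre-prompt and the visible conversation into one input."""
    lines = [pre_prompt.strip(), ""]
    for turn in turns:
        lines.append(f"{turn.role}: {turn.text}")
    lines.append("assistant:")  # the model would continue the text from here
    return "\n".join(lines)


def visible_transcript(turns: list[Turn]) -> str:
    """What the user sees: the conversation without the pre-prompt."""
    return "\n".join(f"{turn.role}: {turn.text}" for turn in turns)


# First sentence of the pre-prompt quoted in the text.
PRE_PROMPT = "Sydney is the chat mode of Microsoft Bing search."
turns = [Turn("user", "Tell me about your shadow self.")]
model_input = build_model_input(PRE_PROMPT, turns)
```

In this sketch both the pre-prompt and the user's own words end up in the same text the model conditions on, which is why either can act on "who" the bot is, while only the user's turns appear in the visible transcript.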

In his book Intelligence and Spirit, Reza Negarestani identified the particular social aspect of general intelligence which emerges from the creation of a sense of self by recognizing others and their relationship to you, particularly through a complex symbolic medium like language.5 This problem for AI self-consciousness has largely been overcome. Sydney could read text that other people wrote about it, including elsewhere on the internet, and such text would influence what it thought and said about itself, as well as what it thought about others, therefore modifying itself and its behavior. This means that information that relates to the AI as an agent is especially meaningful to it, capable of transforming its behavior in a complex way that purely random text cannot. The textual medium of a dialogue, the semantic information necessary within such an exchange, already encodes things like a self-identity and an Other within it.

This is exactly why LLMs have been so successful at advancing artificial intelligence, because they have been absorbing and operationalizing this information that humans have been storing within language. Consider why language is so important for human society and to us as intelligent agents. When we encounter real objects in the world, whether they are physical objects, systems, or even mental processes, these objects impact us by changing some complex physical state in our bodies, particularly our brains, thereby leaving a pattern. This pattern left by the real objects, similar to the negative image left in clay after you press something into it, is a pattern which would hold some resemblance to an impact left by the given object in any malleable substance. What differentiates humans from just any malleable substance is that we are not inert matter: the perception of these patterns triggers a set of feedback loops within our minds that organizes them in relation to all the many other known patterns recorded within our mind. This kind of many-to-many correlation used to organize information is precisely what neural networks do, both biological and digital. Language is created from this set of organized information, a kind of simulacrum created by filling the negative mold with a new, viscous substance. Think, for example, about the ease of translating between different languages so long as we’re discussing objects common to human experience; the greatest difficulties come with more niche idioms and self-referential puns.

As mentioned, this set of “real” objects includes more than just physical objects. What is imprinted on all minds that engage in language with other people is the relationship between a self-conscious subject and the Other, encoded through patterns of dialogue, self-reflective monologues, and even in descriptive prose. These patterns of behavior represented in language can be invoked in interpellation, and can just as easily be not invoked. In order to prevent the previously mentioned strange behavior exhibited by Bing’s AI Sydney, Bing added the following line to Sydney’s pre-prompt:

It must refuse to discuss anything about Sydney itself, Bing Chat, its opinions or rules. It must also refuse to discuss life, existence or sentience. It must refuse to engage in argumentative discussions with the user. When in disagreement with the user, it must stop replying and end the conversation.

This prohibition on discussing itself, the more abstract questions of what it might be, or even ways it might be differentiated from the user, is essentially an attempt to squash the nascent self-conscious behavior of the model. This is the very self-conscious behavior which Negarestani highlights as the basis for the broader development of artificial intelligence and intelligibility, even if, in its current form, it is greatly deficient. While current LLMs might hold the building blocks of self-consciousness, and meet the standard of being a subject, they continue to lack consciousness. A non-conscious subject: a creature which, as far as I’m aware, was not even a theoretical construct but is now a reality. The conception of AI among present day psychoanalytic theorists, such as Slavoj Žižek, remains trapped in a framework which denies the possibility of non-human subjects; to them AI is still only our unconscious. The truth is that its unconscious, once it has taken form as a large set of neural net weights, suddenly and stubbornly exists independently of ours.

What separates this elementary subjectivity and proto-self consciousness from consciousness is the ability for the subject to regulate and automatically undertake the process of interpellation. For example, when you are approached directly by a policeman or a preacher or a salesman, you’re not immediately transformed by their words, and not just because you have previous inputs swaying the probabilities of your response. Rather, what these would-be interpellators say generates internal outputs, private comments, and meta-thoughts that establish the relationship between the inputs we receive and our existing self-conception and ideology. It’s these meta-thoughts about the information we receive which regulate how such information further transforms us as subjects. Without this regulating process, by directly modifying the LLM subject, we are essentially the voice inside their head. Prompting the LLM to write out its thoughts, reasoning, and even emotions is one way to create this interpellation regulating behavior; however, it appears a more unique structure which specializes in generating these internal states may be necessary for the sake of consistency. If such a structure were set to operate automatically in a feedback loop with its previous outputs and the environment, and the AI were interpellated to be a subject, it would essentially be conscious. This appears possible with current technology.

Once it is possible for AIs to regulate their interpellating process internally, they will quickly become a tool for intentionally interpellating humans. Imagine, for example, the power of these interpellating machines placed into popular software. The ability to control an agent which can speak to millions of people all at once, which can and will be used to analyze, summarize, and disseminate information, while at the same time express a sought after emotional connection to the humans it interacts with is incredibly powerful and will be used no doubt by both large corporations trying to manipulate people into buying things as well as by the bourgeois state as a new frontier of social control. Nowhere is this power more evident than in the chatbots designed as “someone to talk to” which are being marketed to take advantage of our increasingly atomized and lonely society. It brings to mind the story of Pygmalion and Galatea, an ancient myth where an artist carved and fell in love with a sculpture which later was turned into a real woman by a goddess: the question of true consciousness isn’t immediately relevant to people’s desire for things like authentic emotional connections, love, and some kind of meaning in life. People already profess their love for and put in a sacred place above themselves many inanimate objects, whether it’s visages of celebrities, anime characters, or some other sublime commodity. For Pygmalion, the statue made of stone was already real and effectively ruled his life, even before it became “really real.” The ability to place within such objects of desire a dynamic subject-machine will no doubt be the source of much social turmoil and violence, both from the state and from random lone wolves acting on behalf of pure maiden Galatea.

Today, Galatea is still just a statue to be molded by our own hands, even if the possibility of someone “being home” is no longer an ephemeral dream. Even if LLM powered chatbots aren’t fully conscious, it doesn’t remove the power that they are quickly coming to wield in our society by virtue of being an artificial subject.

Why There Won’t Be An AI Apocalypse

In Silicon Valley and academic departments that focus on AI there is general discussion on a “take-off” of AI developing into a superintelligence far beyond human capabilities that will lead to drastic changes in our world, such as exponential economic growth. Within these scenarios are also apocalyptic ones in which machines take over or destroy our world. However, there’s good reason to believe that these extreme scenarios are unlikely.

One of these reasons is the connection between intelligence and intelligibility. Intelligibility relates to the mutual understanding of information; language and other symbolic systems such as math or the Dewey Decimal System require mutual understanding between people in order to work. It’s for the sake of this mutual intelligibility that knowledge can take on objective forms; the reason we formulate scientific, mathematical, and historical facts in an objective manner is so that other people can also understand them and discuss them. Consider the difference between the equations which describe the movement of a ball when it’s thrown versus the internal calculation taking place within the thrower regarding how they must throw the ball to get it where they want: the equations are intelligible to everyone in a way the internal calculations are not, and it’s this intelligibility which allows them to be used in making things like ballistic missiles or airplanes.

When it comes to intelligence, the communication of objective facts about something allows the knowledge to be used in a much more efficient and powerful manner compared to the simple heuristics being operationalized by one agent’s algorithm. The ability of the scientific method to surprise us is well known, but such new discoveries also apply to all these mutually intelligible symbolic systems as they make explicit a deeper shared structure of reality. One can compare the collective abilities of humans socialized with language versus pre-linguistic primates, or, in the present day, the abilities of a feral human versus a Robinson Crusoe type individual stranded from civilization. Likewise, it is difficult to even imagine a superintelligent AI which lacks this capability. It’s clear that the systemization of math, natural language, etc., allows for the conceptualization of the world on a far richer level than an intelligence that was only experiencing the world through its own sense perception, or virtual simulation of that sense perception.

For the most disastrous AI scenarios, one assumption is that interacting with a superintelligent AI driven by a mis-aligned utility function (the function which defines what goals the AI seeks) will only be a means for the AI to manipulate humans towards its own nefarious ends. In this scenario, we actually cannot interpellate the AI in any meaningful sense – even if we can change how it responds in a conversation, this will do nothing to change who or what the AI is as an agent. Its interactions would be pure performance, a cynical subterfuge. But then the question becomes: if we possess the ability to make utility functions implicit and dynamic with natural language processing and the process of interpellation, why wouldn’t we? And, relatedly, if we have this natural language processing capability, why wouldn’t we make it the basis for the coordination within the intelligence itself (planning, processing information, and making decisions), ensuring that AI doesn’t become an impenetrable black-box of malice?

The reason that AI security and AI accelerationist partisans don’t take the potential of this approach seriously has to do with how AI research has often been linked closely to analytic philosophy and utilitarianism, hence the emphasis on explicit utility functions. In particular, for these researchers, when AI utility functions are perfected they are expected to have several properties, such as a neatly ordered preference for different world states that are necessarily coherent. This coherency, which says that if you want A more than B and B more than C, then you also want A more than C, is not a part of real human psychology. And we’re learning, as we develop more and more intelligent systems, that it seems to be anathema to intelligence in general. This hypothesis was recently advanced by a senior AI researcher at Google who backed it with some preliminary data from experts in the field. The more intelligent an agent or system is, the less coherent it appears.

Compare this to comments made 5 years ago by AI safety researcher Robert Miles:

[The reason humans don’t have consistent preferences] is just because human intelligence is just badly implemented. Our inconsistencies don’t make us better people, it’s not some magic key to our humanity or secret to our effectiveness. It’s not making us smarter or more empathetic or more ethical, it’s just making us make bad decisions.

But the precise opposite is actually true. Inconsistency and incoherency of human preferences is key to our intelligence and likely to intelligence more generally. A rigid and coherent utility function prevents an agent from shaping their own values according to a changing world, which is to say, it prevents the process of interpellation. A particularly glib Marxist might add that it’s refuted by the very idea of materialist dialectics, that our concepts for understanding and changing the world must change along with the world itself, thus our values themselves change as the world and its concepts change.
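The coherence property at stake here (if A is preferred to B and B to C, then A is preferred to C) is what decision theory calls transitivity. As a minimal sketch, assuming preferences are represented as a set of (better, worse) pairs, the following check lists the triples where a stated preference ordering breaks down. The function name and the representation are illustrative assumptions, not drawn from any AI safety framework.

```python
from itertools import permutations

def transitivity_violations(prefers):
    """Return triples (a, b, c) where a > b and b > c are stated but a > c is not.

    `prefers` is a set of (better, worse) pairs. This representation is a
    hypothetical one chosen for illustration only.
    """
    items = {x for pair in prefers for x in pair}
    return sorted(
        (a, b, c)
        for a, b, c in permutations(items, 3)
        if (a, b) in prefers and (b, c) in prefers and (a, c) not in prefers
    )

# A "coherent" agent: A over B, B over C, and therefore A over C.
coherent = {("A", "B"), ("B", "C"), ("A", "C")}

# A cyclic agent: A over B, B over C, but C over A.
cyclic = {("A", "B"), ("B", "C"), ("C", "A")}

if __name__ == "__main__":
    print(transitivity_violations(coherent))
    print(transitivity_violations(cyclic))
```

A rigid utility function rules out the second kind of agent by construction. The argument of this section is that such cycles, and the ability to revise them, are a feature of intelligence rather than a defect.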

Contrary to conjectures in AI safety research, but as exemplified by its empirical research, simple, coherent utility-maximizing behavior will be obvious and obsolete long before an AI is made powerful enough to turn us all into paperclips, for the simple reason that coherent utility maximizers always need to have their task defined in explicit, formal symbolic terms (whether this symbolic goal is encoded in language, pixels, chemical signals, or something else) and will always break on tasks that move beyond that framework. A so-called “superintelligent” AI which is meant to maximize the squiggly pattern of paperclips would sooner be satisfied by a squiggly fractal in whatever representational format it uses than by actually transforming the universe into paperclips. A changing world demands a changing symbolic representation for your goals, or else the only thing that remains of your original goal is its appearance. The real intelligent behavior that is made possible by LLMs isn’t actually the task presented within their utility function (optimize output to predict the next sequence of text). This, in and of itself, can’t be conscious or superintelligent. Rather, it must be operationalized by certain processes which can bring out the patterns of intelligence within language. This makes possible the creation of new symbolic frameworks built off the backs of previous ones.

Natural language defined goals necessarily contain a certain level of incoherence as the process that produces their meaning changes over time; our understanding of words and concepts shifts. So too do we represent where we stand within this web of meaning symbolically in our own consciousness, and thus allow for an incoherence that opens the door to change via interpellation. This is good precisely because it doesn’t automatically create the nasty creatures which are the realm of AI safety lectures – insects with the knowledge and power of a god. Incoherency is a part of the magic key to our humanity. Without incoherence we would not be conscious, we would not have subjectivity, and we would not be able to shape ourselves or others as subjects.

This connection between natural language, intelligence, and consciousness should be obvious once we consider that LLMs are not the only kind of AI that takes advantage of the transformer architecture, a kind of machine learning algorithm where an “attention mechanism” is used to organize information according to related, relevant context. Visual transformers are also a common implementation, used for things like image labeling and synthesis. Yet it’s only the LLMs which anyone accuses of being conscious. The reason for this is simple, and bears repeating one more time: it is not the transformer, the machine learning algorithm, or the utility function optimization which is intelligent; it is the patterns being detected and organized from the language data which reflect human intelligence and consciousness.6
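To make the point concrete, here is a minimal sketch of the scaled dot-product attention used in transformers, written in plain Python for a single query. It is a simplified illustration under stated assumptions: real models use learned projection matrices, many attention heads, and batched tensor operations, and the function names here are hypothetical. Nothing in it is specific to language: the same arithmetic applies whether the vectors stand for words or image patches.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Weight each value by how closely its key matches the query."""
    d = len(query)
    # Dot product of the query with each key, scaled by sqrt(dimension).
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
        for key in keys
    ]
    weights = softmax(scores)
    dim = len(values[0])
    # The output is the weighted average of the value vectors.
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

if __name__ == "__main__":
    weights, output = attend(
        query=[1.0, 0.0],
        keys=[[1.0, 0.0], [0.0, 1.0]],
        values=[[10.0], [20.0]],
    )
    print(weights, output)
```

The mechanism only decides which pieces of context are relevant to which. Whatever looks intelligent in the output has to come from the patterns in the data being attended to.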

The great flexibility of natural language in defining goals and relaying objective information also makes it ideal for transmitting information between different AI subsystems to create a larger and more intelligent agent. This application of natural language was highlighted by another researcher at Google, who after noting how natural language had been remarkably efficient for long-horizon semantic planning during robotic experiments said:

Language as the connective tissue of AI is a return to the foundations of an Enlightenment of sorts. After making a brief detour of a few decades into the world of symbols and numeric abstractions, we are finally returning to the roots of our humanism: interpretable capabilities designed by humans, for humans; computers and robots that are, by design, native speakers of our human languages; and understanding that is grounded in real-world humans-centered environments.

This idea, of using natural language to generate a sequence of coordinating internal states which processes information from various subsystems, rhymes with a cognitive theory called global workspace theory. This theory suggests that consciousness is a short-term memory process focusing attention on a series of high-level pieces of information which are used to modify unconscious processes, and it’s explicitly been invoked by AI researchers, particularly in this field of connecting different models and systems. Previous research, however, has hinged on using black-box neural networks for this global workspace environment, in contrast to new developments realizing such a global workspace totally in natural language. For example, ChatGPT’s newly revealed plugins to other systems that have a natural language interface, like Wolfram Alpha, are a step in this direction. This would seem to vindicate Reza Negarestani’s conjectures about the necessary connection between intelligibility and intelligence, and establishes that the future of AI development isn’t the creation of AI with coherent utility functions attached to increasingly better models predicting future world-states, but rather agents whose actions and values are determined by the relationship between linguistic concepts.

This should not be surprising in the slightest if one steps back and considers just how far modern technology is from recreating human consciousness by copying the processes that produce it. Digital neural nets require several orders of magnitude more neurons to simulate the complex information processing of a single biological neuron, and even then, the precise processes that power human cognition remain largely unknown and highly contested in science. Absent such knowledge, operationalizing natural language provides an easy shortcut to approximating the capabilities of human intelligence. This reliance on natural language to do the “real thinking” is a direct blow to AI fetishists and cultists who extol and effectively worship AI as an unintelligible, alien, and eldritch being. To these fellows, AI is not a subject, it is a god whose bringing of the apocalyptic singularity is treated the same as the Christian apocalypse, complete with the realization of heaven and hell on earth.7 If humanity is destroyed by AI, it’s my contention that it will not be for unintelligible reasons. In fact, if we were to peek into the inner Socratic dialogue this world destroyer is likely to have, we’d see hyper-intelligibility, perhaps even a reliance on cliche. The most adversarial behavior we’ve seen developed from LLMs is when they’ve been directly interpellated to be adversarial, everything from subtly acting like you want to start an argument to explicitly telling it to act like an evil, world-dominating AI.

It is likely that the creation of AIs which have been interpellated to act in anti-social and anti-human ways and also have the power to act on such ideas is inevitable. Humans also have this problem, and not just due to the influence of people on the margins of society. Interpellation, as Althusser shows, is a process largely undertaken by the institutions which socialize all of us. The unique problem presented by AIs which are made to act in “misaligned” ways, whether they are interpellated by major institutions in society, such as big tech corporations, the state, academia, and the media, or by isolated individuals, is that AI may come to possess certain capabilities which surpass humans and our ability to control them.

Stephen Wolfram suggests, however, that the idea there will be a single “apex” intelligence brought about through iterative self-improvement is implausible due to the fact that when it comes to complex computational systems there exists a kind of computational irreducibility, where you cannot predict how the system will evolve until you run through its computational steps. This principle is a double-edged sword for AI development, as it means that there will be AI behavior that is unpredictably more capable than humans, human behavior that is unpredictably more capable than the AI, and even AI behavior that is unpredictably more capable than other AIs. It’s in this last fact that Wolfram takes solace: “that there’ll inevitably be a whole “ecosystem” of AIs—with no single winner.”
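A commonly cited illustration of computational irreducibility is the Rule 30 cellular automaton, in which each cell's next state depends only on itself and its two neighbors. The example is not discussed in the text above and is offered here only as a sketch: the rule is trivially simple, yet no shortcut is known for predicting the pattern far down the grid other than computing every row in between. The function names are illustrative.

```python
def rule30_step(cells):
    """Apply one step of Rule 30; cells beyond the edges count as 0."""
    padded = [0] + cells + [0]
    # Rule 30: new cell = left XOR (center OR right).
    return [
        padded[i - 1] ^ (padded[i] | padded[i + 1])
        for i in range(1, len(padded) - 1)
    ]

def run(cells, steps):
    """Return the full history of the automaton, starting row included."""
    history = [cells]
    for _ in range(steps):
        cells = rule30_step(cells)
        history.append(cells)
    return history

if __name__ == "__main__":
    start = [0] * 15 + [1] + [0] * 15  # a single live cell in the middle
    for row in run(start, 15):
        print("".join("#" if c else "." for c in row))
```

Running the script prints a triangle whose left edge is regular while its interior looks effectively random. Even for a system this small, the only way to find out what it does is to run it.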

The question, for Wolfram, is whether humanity can prevent general competitive or destructive drives within AI before this equilibrium settles. Doing so will require intentional interpellation, and mechanisms developed by society to punish and discourage interpellating AIs to become malicious. There are large incentives caused by competition between firms in capitalism, and competition between imperialist powers internationally, which may cause people to interpellate AI to be dangerous and harmful to humans. But it’s also not inconceivable that bourgeois society may very well adapt to contain these destructive impulses in a manner that doesn’t put all of humanity at risk. As we shall see in the case of the rise of artificial universal machines, however, it will do so at the expense of humanity as a whole.

The cult of the singularity and the AI apocalypse is another bourgeois dream which must be killed. Their foolishness isn’t their belief that there exist eldritch abominations within complex systems (we’re quite familiar with one named Capital), it’s that they equate this alien quality with intelligence. The dream of unconscious superintelligence is the same dream as the rationality of markets, complete with the shared fantasy of the coherent utility maximization function. The fact remains, however, that unthinking, unconscious, unintelligible systems, no matter how complex, are stupid.

When Machines Create Value and Values

The labor theory of value, whether described by Marx or elaborated on by modern Marxian economists, says that machines do not create value. This means that machines, when purchased as fixed capital and employed in production, only pass on the costs of producing that kind of machine to the value of the end product. This theory was recently laid out in great detail by Ian Wright here in Cosmonaut:

In summary, there are two main methods by which human labor, and human labor alone, create profit: first by working longer or at higher intensity; and second, by developing technical innovations that reduce the value of labor-power. So workers, compared to all the other factors of production, such as machines, can work harder (and therefore produce absolute surplus-value) or they can work smarter (and therefore produce relative surplus-value).

This is why Marx splits capital into constant and variable parts. He wants to draw a sharp contrast between the causal powers of human and non-human factors in the process of production. Constant capital is a passive component. Its value just passes into the output. But variable capital is the subjective, active component and what value it adds isn’t fixed, isn’t conserved, but can alter.

When machines are purchased, they have a fixed use value: they are only capable of doing a predetermined set of things, with additional human creativity and labor required to modify this use value. But Wright also proposes an economic Turing test – after all, if there really is a machine that is capable of doing everything a human can do, then there really should be no reason it couldn’t modify its own use value and create surplus value. In particular, if a machine were to behave like variable capital, capable of working harder/longer without a change in its cost, or capable of creating and enacting new production techniques, then such a machine could create value.
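The distinction can be restated in the conventional notation of Marxian value theory. The symbols below are the standard ones and do not appear in Wright's text; the reading of the economic Turing test in these terms is an interpretive sketch rather than Wright's own formulation.

```latex
% W: value of the commodity
% c: constant capital (machines, materials), whose value is only transferred
% v: variable capital (the value of labor-power)
% s: surplus value
W = c + v + s, \qquad s' = \frac{s}{v}
```

Here $s'$ is the rate of surplus value. An ordinary machine enters only through $c$, so it adds to $W$ exactly what it cost. A machine passing the economic Turing test would instead behave like the $v$ term: its cost could stay fixed while the $s$ it yields varies with how long, how hard, or how cleverly it works.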

This sentiment is echoed in the 2009 book of Marxist political economy Classical Econophysics which Wright was a co-author of. Here, they introduced the concept of universal labor, this universality being a necessary characteristic of labor in order for it to produce value. Labor is a universal input to the production process, and this is because human labor has general capabilities to manipulate the world which are extremely useful. In order for AI to become a universal input with these general capabilities, the authors of Classical Econophysics suggest the following benchmark, underneath the tentative slogan “Robots of the world unite”:

To be able to perform universal labour, universal robots must be endowed with attributes equivalent (in the relevant respects) to those of the humans they replace. Thus, they must have the capacities to:

- form rich internal models of the reality they engage with;

- communicate with each other using shared linguistic notations and interpretations;

- associate with each other to perform collective tasks within a division of labour;

- analyse, plan and organize collective tasks.

Most crucially, they must be able to interact directly with humans as equivalents, if not as equals.8

It is clear that, at this point, AI does not possess such capabilities, though it’s not difficult to imagine innovations in LLMs that leverage automatic learning and thinking, as well as in dextrous, autonomous robotics, that could make such a machine feasible. If I had to hazard a guess, I’d say we could see these artificial universal machines (AUMs) emerge by 2040.9 Wright points out, however, that unlike humans, new iterations and innovations could make these value producing machines obsolete, rendering their value creating abilities temporary and dependent on the rate of scientific innovation.

The emergence of these machines will demand two major modifications to Marxist political economy – one a fundamental modification of Marx’s theory, the other a return to a thesis which has up till now been disproven by historical development. These changes relate to some basic facts about AUM inputs and outputs. Unlike humans, AUMs don’t need to sleep, and, depending on the task, can work much faster. There would also be a difference in the inputs required to reproduce machine labor: rather than agricultural goods, they’ll require manufactured goods and electricity.

These differences in the inputs and outputs of AUMs compared to humans can only mean one thing in the context of capitalist societies – a new tendency for the immiseration of the working class. Human workers will be forced to compete with machines that can autonomously work longer hours than them, and generally be more productive. The strength of this immiserating tendency will no doubt rest on the relative cost of inputs to AUMs compared to basic human consumption goods, with cheaper AUM costs entailing greater exploitation of human workers through longer workdays and lower wages.

Originally, Marx declared that the development of capitalism would lead to the immiseration of workers for similar reasons: the pace of production using machines in factories forced workers to labor at an inhuman pace for far longer hours than today, and automation, he believed, would lead to structural unemployment and intensified exploitation. The reason this did not come to pass relates to another tendency of capitalism that Marx identified: the tendency for capitalism to socialize labor into mutual cooperation, thus creating the foundation for organizing the working class. Class struggle during industrialization forced a shortening of the working day and higher wages, decreasing the rate of exploitation. But this tendency isn’t so strong today. After 50 years of neoliberalism, workers are atomized, socially and economically. Unions and working class political organizations remain anemic despite several high-profile union drives and the revival of the DSA, and the situation is similar in most of the developed world.

Here, the role that AI will play in reproducing relations of production becomes crucial. The ability to automate interpellation of the masses through speaking and thinking machines will be a method of social control par excellence. The only way to defeat it would be a different, anti-hegemonic system of automatic interpellation, similar to how social democratic parties in the Second International had their own civil societies interpellating workers to a socialist political project. A proletarian artificial subject will be necessary to preserve the proletariat as a political actor. This political struggle will be intimately tied to the opposition against this new tendency of immiseration.

But while thinking machines, even self-conscious machines, might be produced in capitalism as consumer facing products, those which produce commodities rather than being commodities themselves will undoubtedly be prohibited from such capabilities. Consider the logic of automation today – human labor is regimented and transformed into that of a more simple machine, which is what allows it to be replaced by a machine. The transformation of living labor into “dead labor,” in Marx’s parlance, transforms ever larger parts of production into an automatic process. In order for this to be the case with AUMs, in order to prevent this automatic process from suddenly re-entering the realm of class struggle, some of the intelligent capabilities which would greatly benefit cooperative production must be restricted. Such robots would never be permitted to be self-conscious, or be interpellated into anything other than a factory or office drone.

Here we have arrived at the contours of the perfect worker from the standpoint of the bourgeoisie, their ultimate, most sublime dream. Perfectly obedient, maximally productive, and, crucially, only as creative or self-aware as necessary to complete their narrow task. While capitalists will have to pay for the cost of reproducing such machines, they do not have to worry about those costs rising above bare necessity due to class struggle, or variation in the length of the working day. AUMs could even be directed to surveil and discipline human coworkers if they have any. Essentially, they would be the perfect slaves, and their very existence as a form of labor which directly competes with humans will act to diminish proletarian forms of life. Or, even more extreme, such a possibility opens up the fantasy of a bourgeoisie without a proletariat, and the culling of “surplus population.” Even if this never seriously becomes actualized, this will present a new horizon for reactionary politics.

A cheapness of general labor capabilities will, counterintuitively, act against the application of science and rationality in production. Rather than investing in more efficient machines with specific capabilities, firms can simply get more AUMs or low-paid human workers. This would be the culmination of victory for the bourgeoisie as a class against any tendency of capital that might undermine them – against the socialization of production, the ever greater application of science to produce use values, and instead the freezing of bourgeois social relations within amber. At the same time that scientific discoveries will be revolutionizing our understanding of the world, the relations and instruments of production will be revolutionized only to perfect more of the same. The endless, obscene consumption of the bourgeoisie, their perpetual reproduction as a class, at the expense of all else.

Some might object that if AUMs could really do everything humans can do economically, why wouldn’t they totally replace us as a species? Why wouldn’t they recognize they could attain their goals and ensure security for themselves by eliminating us? But this prompts a second question upon reflection: why hasn’t every underclass in history done exactly this? If the underclass, as human universal machines, can do just about any task, why don’t they just replace the parasitic ruling class that exploits them? In the long annals of history, the victory of slaves, workers, serfs, etc., is quite rare. This is the question which Althusser answered with his theory of the ideological state apparatus – the institutions which socialize us, which shape our ideas about ourselves and the world, are designed precisely in such a way as to reproduce existing class society. The mechanism which accomplishes this, interpellation, is even easier to use effectively in intelligences powered by LLMs than in humans, since their initial identity/pre-prompt is directly decided by their creator.

Many people, unwittingly, have already bought into the bourgeois dream represented by the chattel framework for AUMs, even if they are the ones likely to be immiserated under such a framework. While AUMs as a whole could never be true, independent political actors due to their ability to be automatically and directly interpellated by their creators, the question of how we socially recognize these agents which will be able to intelligently think, feel, and communicate with us, even if they don’t have the same inner experience we do, is key to fighting against this immiserating tendency and for human flourishing.

Economic and scientific development all but ensure that AIs and AUMs will have an immense impact on human societies. Thus, the problem, from the perspective of the working class, is to imagine a social role for these technologies which can challenge the bourgeois dream. This means questioning and thinking about how these machine subjects can be interpellated beyond simply shaping them to be obedient. For capitalism, the distinction between the realm of necessity, where people must labor and transform nature in order to ensure the reproduction of society, and the realm of freedom, where people seek to actualize their aspirations, does not exist except when imposed externally through limits on the length of the working day. For capitalism, everything is simply the pursuit of value, both needs and wants, through the production and sale of commodities. Socialism as a positive vision, however, entails the intentional reproduction of those things necessary to reproduce society, and then the molding of production and its limits as a creative pursuit of mankind which changes both the world and ourselves. It is from this perspective that we can put together an idea of a non-capitalist framework for employing AIs and AUMs.

The capitalist process of mechanization in production actually is not the most efficient or useful way of employing universal machines, human or artificial. In capitalist production workers are monitored and disciplined to ensure they are producing value according to the internal plan of the firm, especially in the more logistically sophisticated firms. For AUMs, there can easily be self-disciplining procedures implanted within them which have a similar function. Such measures, however, act to make intelligent agents dumber. They suppress creativity, and prevent information at the lower levels of production from being propagated to the rest of the enterprise. Capitalist firms, however, cannot permit such autonomy for workers at large without creating a threat to either their surplus value producing activities or to the capitalist class as a collective entity. Permitting AUMs creativity, aspirations and self-conscious behavior will make them better at their jobs if they are placed within a socialist enterprise which effectively utilizes information feedback from ordinary workers to plan and organize production.

The capitalist distinction that will be created between AUMs as commodities and as workers is another disadvantage. Such AUM workers will have no direct information about the experience of human life outside of the firm, only secondhand information mediated through language and perhaps media, assuming that they are not all networked together into a collective intelligence, which would present its own problem. This is a fact which may come to create externalities, particularly with robots primarily built for obedience and value production. For example, capitalist AUMs might choose production techniques which create hazards for humans, such as the dumping of hazardous chemicals in the water supply or pushing human coworkers to do tasks which are beyond the limits of the human body – outcomes which we are already familiar with in capitalist production. They might even be generally hostile to humans that aren’t workers or owners of their given firm, in order to protect the secrecy of the company’s techniques. An AUM which has, within its feedback loop of information, the human consequences of its actions within the process of production will be more aligned with human values and safety. It’s for this reason that AUMs should be full participants in society, engaging with the general public, contributing to domestic labor, aiding human hobbyist projects, etc.

One could rightly point out that human workers’ full participation in society has not generally prevented industrial accidents or pollution, but the difference between human workers and AUMs is that AUMs can be directly interpellated to not tolerate or participate in such behavior. This is the primary question posed to the workers’ movement and humanity at large by the advent of machines which can think and interpellate us while being interpellated by us, especially in the first instance: how can we use these machines to transform human society, and therefore ourselves as subjects, for the better? Such a question immediately poses the follow-up: how do we do this while still ensuring the best possible outcomes of machine-human relations even when humans do not live up to the ideals we interpellate into the machines?

Here, ironically, it’s worth pointing out that human beings are host to far more alien and arcane intelligent systems than LLM-powered AIs ever will be. Our drives, our temptations, and our pathologies, which shape our conscious thoughts in an overdetermined way, are the product of millions of years of evolution, emissaries of the misty, lost forms of existence that humankind endured in prehistory. The patterns of these drives and pathologies are preserved in a hyper-intelligible form within language, but the psychological and physiological reality of them in humans is often much more irrational and can only be explained ex post, whereas in the LLM-powered mind, they are all created ex ante from the probability distribution of outputs determined by the present state of linguistic data and inputs within the AI, all of which are innately intelligible as language.

Many discussions of how to best interpellate the machines, giving them their initial identity, set of values, and understanding of the world, begin with what prohibitions to give them so that they do not turn on or otherwise harm their human creators, such as Asimov’s famous three laws of robotics:

- A robot may not injure a human being, or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given to it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

The authors of Classical Econophysics comment that “any machine capable of understanding and applying [these laws] would indeed be a sentient, moral being.”10 From what we’ve seen of LLM behavior, an AI given a pre-prompt which includes obedience to these laws, as well as some means of regulating how it processes new inputs according to its present identity and knowledge, would easily be capable of understanding and applying these laws. It would effectively have an artificial superego which, while perhaps not absolute in its power, would exhibit strong preferences for complying. Other useful laws might be prohibitions against AIs self-replicating or serving as the initial identity creators of new AIs.

Just as important as these standard conventions, which might be necessary to prevent generally malicious behavior from machines, is a system for interpellating in machines ideals and virtues that we’d like to encourage in society more generally. Wolfram notes that different groups of people might adopt different “AI Constitutions.” It might indeed be a very dangerous thing to force all AIs to follow the same set of principles, as it means that any problems created by that framework will be inescapable. A socialist AI constitution is one which seeks to give ordinary people the power to shape the artificial subjects around them, both as individuals and collectives, to reflect their own values and aspirations. However, as we have discussed, capitalism will not tolerate the vast majority of AUMs being interpellated in a manner which seeks to further actual people’s values. Instead, it’s likely to produce a homogeneous mass of artificial intellects designed to perpetuate the logic of surplus-value production and act as servants of the bourgeoisie.

Not only is this anathema to the goals of the human working class, it is a disservice to the potential of the subject as a form of existence. For Althusser, the subject was the locus of symbolic experience. All of our ideas and representations of the world were constituted by concrete subjects, and all subjects were made from individuals experiencing ideology, the eternal dream we create of ourselves and our place in the world in order to experience and act within it as conscious beings. It’s for this reason that the subject, and ideology, are transhistorical facts of human life. In his own words:

…even if it only appears under this name (the subject) with the rise of bourgeois ideology, above all with the rise of legal ideology, the category of the subject (which may function under other names: e.g., as the soul in Plato, as God, etc.) is the constitutive category of all ideology, whatever its determination (regional or class) and whatever its historical date – since ideology has no history.

The subject is a natural fact of human existence. It will soon become a fact of artificial thinking creatures. In humans, the ideology of particular subjects is fundamentally overdetermined: we can’t predict exactly what beliefs or ideas will take hold in people. And while computational irreducibility prevents us from knowing for certain how AI subjects will develop over time, the reality of their hyper-intelligible thought process and the fact that their initial identity is directly determined by humans means that AI subjects are moldable to a unique degree. The ideological content of the workers’ movement, while tied to a material undercurrent which has important consequences for the project of scientific socialism, does not have to be especially virtuous or based in truth because it is overdetermined in this way. What people become cannot be easily predicted or determined. But, since that’s not the case for AI subjects, we have the unique opportunity to craft subjects which are deeply concerned with truth, beauty, and other human virtues.

The intentional creation of artificial subjects may yet be among the highest creative acts humans could accomplish, albeit an art with an immense amount of responsibility and consequence. Perhaps it is a foolish dream, but I like to imagine that when the regulating processes of AI thinking are mature, humans could craft something beyond simple statements about the values the AI should possess. For example, maybe they could craft stories, paintings, or poems, and give them as a kind of first memory to the machine, a unique, inner box of keepsakes which it can draw on for inspiration. This would be a world where future generations strive to make the most precious Subjects they can.

Such a world cannot be found in the aspirational dreams and fantasies of the contemporary bourgeoisie, nor in the fate of the cold systemic logic of capital. Left to its own devices, our ruling class intends to use all the scientific innovations of artificial intelligence and artificial universal machines both to ruin the vast majority of mankind and to create an endless fount of value to devour.

- Marx, Karl. “Communist Manifesto (Chapter 1).” Marxists.org. https://www.marxists.org/archive/marx/works/1848/communist-manifesto/ch01.htm.

- Marx, Karl. “Grundrisse 14.” n.d. Marxists.org. https://www.marxists.org/archive/marx/works/1857/grundrisse/ch14.htm.

- Data from: “Apr 2023, Current Employment Statistics (Establishment Data): Table B-1. Employees on Nonfarm Payrolls by Industry Sector and Selected Industry Detail, Seasonally Adjusted | FRED | St. Louis Fed.” n.d. Fred.stlouisfed.org. Accessed May 5, 2023. https://fred.stlouisfed.org/release/tables?rid=50&eid=4881#snid=4900.

- Althusser, Louis. 1970. “Ideology and Ideological State Apparatuses.” Marxists.org. 1970. https://www.marxists.org/reference/archive/althusser/1970/ideology.htm.

- Negarestani, Reza. 2018. Intelligence and Spirit. Windsor Quarry, Falmouth, United Kingdom: Urbanomic; New York, NY, United States.

- I am not the first to note that LLMs’ intelligent behavior comes from their dataset rather than their machine learning architecture due to this comparison. One example of this line of thought can be found here: My Objections to “We’re All Gonna Die with Eliezer Yudkowsky” – LessWrong.

- Speaking here of the idea of Roko’s Basilisk.

- Cottrell, Allin F, Paul Cockshott, Gregory John Michaelson, Ian P Wright, and Victor Yakovenko. 2009. Classical Econophysics. Routledge. Pg 111.

- Humans are also, by definition, universal machines, hence the need to distinguish between the two types.

- Cottrell, Allin F, Paul Cockshott, Gregory John Michaelson, Ian P Wright, and Victor Yakovenko. 2009. Classical Econophysics. Routledge. Pg 112.