Amelia Davenport introduces a text by William Grey Walter that presents the basic concepts of cybernetics.

Introduction

Though little known outside the narrow circles of cybernetics, neurology and robotics, American-born British polymath William Grey Walter revolutionized our world. A prodigy from early childhood, he built wireless radios at the age of 9, before widespread radio broadcasting had even reached Britain. Denied a research fellowship after graduating from King’s College in Cambridge, Grey Walter spent his early career performing applied neurophysiology research in hospitals and then at the Burden Neurological Institute in Bristol. In this period, he conducted research in many countries including the United States and Soviet Union. Grey Walter’s career as an inventor took off as he developed an improved version of Hans Berger’s EEG (electroencephalograph) machine that allowed the detection of a greater variety of brain waves, from Alpha to Delta waves. The medical implications of his discoveries included using amplifiers and an oscilloscope to locate tumors in patients through their delta waves. During the Second World War, Grey Walter, like his fellow cybernetician Norbert Wiener, joined the fight against fascism, designing radar scanning and missile guidance systems. Grey Walter would become associated with several other prominent British cyberneticians, like the socialist economic planner and mystic Stafford Beer, neuroscientist Ross Ashby and therapist, logician and ecology pioneer Gregory Bateson.

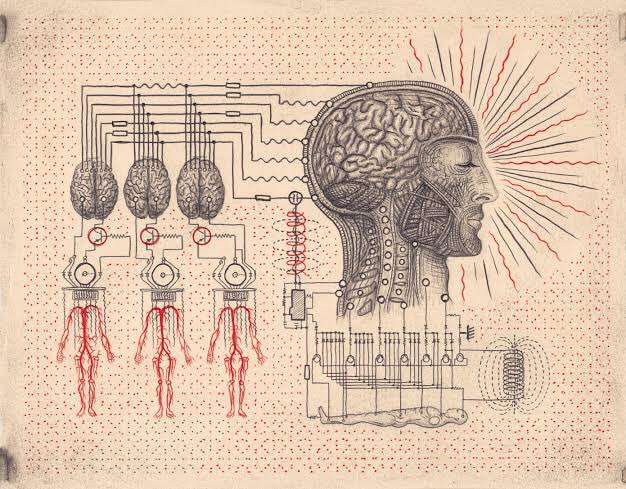

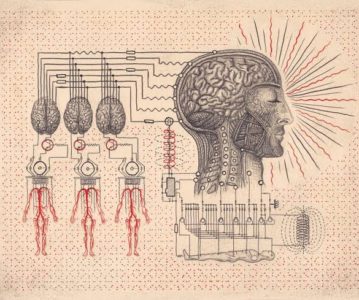

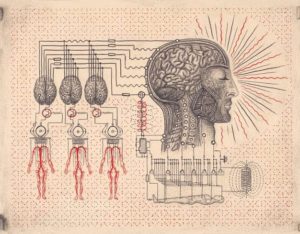

Between 1948 and 1949, Grey Walter introduced his greatest contribution to human knowledge. He constructed the first two autonomous robots known to exist. Named Elmer and Elsie (ELectroMEchanical Robot, Light-Sensitive), the “tortoises,” as Grey Walter called them, were designed to mimic the functions of a human brain according to a highly simplified model. Essentially, Grey Walter wanted to demonstrate that even very few brain cells, wired together correctly, could produce an extremely wide variety of complex behavior. While the tortoises would not pass the Turing test (a famous benchmark for whether an artificial system can be called “intelligent” based on whether it can convincingly pose as a human or not), they could reasonably be called intelligent, if an outside observer were told they were “real” animals. Grey Walter would further develop the tortoise models with reflex circuits that allowed them to learn associations similar to Pavlov’s dogs. The invention of autonomous robots capable of learning, though an incredible feat, was not the summit of Grey Walter’s ambition; his goal was to help develop a better comprehensive understanding of the mind. For Grey Walter, invention is just one moment of the scientific process. This sets him apart from the “great inventors” of the capitalist type like Edison for whom the finished product and its specific utility are the end goal. As Grey Walter said in a lecture delivered at Cambridge some years later, “A scientific hypothesis, a space-ship, a nuclear reactor, as well as a poem or a picture, are not merely adequate replies to questions or challenges from the outside world; they are devices for asking questions, and the question is the creation.”

The essay below represents Grey Walter’s contribution to an anarchist zine, likely under the influence of his eldest son Nicholas Walter, a noted anarchist and peace activist. Walter’s own politics shifted from sympathy to Bolshevik communism to anarchism after the conclusion of WWII and experiences working in the USSR. The essay is intended to give the reader a basic overview of several important concepts and developments within the areas of cybernetics Grey Walter himself was most involved in advancing. Missing are crucial concepts like “autopoiesis” (the self-organization of systems), “the law of requisite variety” (how a system handles the complexity of its environment) and the idea of the homeostat. However, the concepts it does explore, it does masterfully. Grey Walter’s exploration of concepts like causality and teleology, feed-back, the use of toy models as an analytic tool, “all-or-none” responses, free will, habituation, and traffic control are woven into a single coherent narrative that breaks down the barrier between personal narrative and objective discussion. Of particular interest is the treatment of government systems with a cybernetic lens at the end of the essay. Grey Walter gives a glowing overview of the governmental structure of the United States as an apparatus for maintaining stability and preventing change while also discussing the means by which a unipolar autocracy might be able to achieve the same results, provided it is comfortable enough to not overtly undermine the expression of its citizenry. At first such a rosy picture from an anarchist seems out of place, but when looked at from the same perspective with which one should read the lifelong Republican Machiavelli’s The Prince, it becomes clear that Walter is providing his readers with the objective analysis of a detached scientist, not a normative view on the proper social order to establish.

Given the renewed interest cybernetics has gained on the left as a potential tool for organizing socialism, works like these are essential for helping clarify what cybernetics is and is not. It is not robots and fast computers. Cybernetics is a scientific research program, but it is more than that. It is a philosophy and an orientation to the world that implies a kind of ethics. Cybernetics is the art of piloting systems and the self through the chaos of reality. By familiarizing yourself with the ideas in this text, and others like Pickering’s The Cybernetic Brain, Stafford Beer’s Designing Freedom, and Norbert Wiener’s The Human Use of Human Beings, you might find that navigating the anarchy of our world becomes a bit easier.

The Development and Significance of Cybernetics

In American academic circles the Greek letters Phi Beta Kappa have a particular meaning: they are the initials of a society of distinguished scholars, selected for their talent and attainment in the Arts or Sciences. These letters stand for a maxim which is generally supposed to mean: “Philosophy is the steersman of Life”.

During the last ten years the essentially ambiguous statement (and ambiguity is a common feature of classical maxims) might well be considered to have been received by inversion; for cybernetics, the art and science of control, has been claimed to provide a new and powerful philosophy in which the problems of physical, living and artificial systems may be seen as an intelligible whole. To what extent is this claim justified and from what has this school of thought developed?

Historically, the term cybernetics was first used in a general sense by Ampère in his classification of human knowledge as “la cybernétique: the science of government”. In etymology the term is, of course, cognate with government, gubernator being the Latinized form of the Greek for “steersman”. The re-introduction of the word into English by Norbert Wiener as the title of his book, published first in France and later in America, marked the beginning of the new epoch in which the problems of control and communication were explicitly defined as being common to animals, machines and societies, whether natural or artificial, living or inanimate.

The origin of Wiener’s interest in this development was the invention of electronic aids to computation toward the end of the war, combined with his personal contact with neurophysiologists who were investigating the mechanisms of nervous conduction and the control of muscular action. Wiener was at once impressed by the similarities of the problems posed by military devices for automatic missile control and those encountered in the reflex activity of the body. As a mathematician and scientist of international repute and wide culture Wiener was as powerfully repelled by the military applications of his skill as he was attracted by its beneficent use in human biology. In his second book “The Human Use of Human Beings” he develops his humanist, liberal ideas in application to social as well as physiological problems, in the hope that it may not be too late for the human species to find in machines the willing servants essential for prosperous and cultivated leisure. Writing at a time when the ignominious annihilation of a hundred million innocent bystanders is a calculated risk, as Wiener admits, this is a faint hope indeed.

Associated with Wiener in the first years of the cybernetic epoch were a number of American mathematicians, physicists, engineers, biologists, psychologists and medical men, and this interdisciplinary texture is, of course, the most striking feature of cybernetic groups. Within a short while of the publication of “Cybernetics” the Josiah Macy Jnr. Foundation organized the first of ten conferences on this subject, and the proceedings of the last five of these form an indispensable treatise on the widest range of subjects, including computer technology, semantics, brain physiology, psychiatry, artificial organisms and genetics. The factors common to all these topics may be found in the sub-title of the Macy publications: “Circular, Causal and Feed-back Mechanisms in Biological and Social Systems”. The phrase that has caught the ear of many listeners to such discourses is “feed-back mechanisms”, partly because the notion of feed-back has been invoked to account for a wide variety of natural phenomena and embodied in many artificial devices to replace or amplify human capacity.

To physiologists, feed-back is familiar under the name of reflex action, and the novelty of the concept in engineering is an indication of the youth and naiveté of that discipline. No free living organism could survive more than a few minutes without feed-back or reflexive action and this truth was embodied in the famous dictum of Claude Bernard “La fixité du milieu intérieur est la condition de la vie libre”. Freedom of action depends on internal stability, and this latter can be attained and maintained only by the operation of forces within the organism that detect tendencies to change in the environment and neutralize or diminish their influence on the internal state. The diagram illustrating this process of reflexive control or homeostasis could represent the mechanism of temperature control in a man or the position of a paramecium in a drop of water, or the ignition timing in a motorcar or the volume control in a radio or the water level in a domestic water closet. The first artificial reflexive system to be used in quantity was the rotating-weight speed-governor designed by James Watt and mathematically analyzed by J. Clerk Maxwell in 1868. The verbal description of such devices emphasizes their peculiar interests; in a steam engine with a governor the speed of the engine is controlled—by the speed, in a water closet the water level is controlled—by the water level, and so forth. What then controls what, and for what purpose?

The concept of purpose emerges inevitably at an early stage in such reflections and one of the interesting consequences of cybernetic thinking is that teleology, for so long excluded from biological philosophy, re-appears in a more reputable guise as a specification of dynamic stability. When scientific biology emerged from Pre-Darwinian natural history it became unfashionable to ask openly the question “what is this organ or function for?” Most biologists, being at heart quite normal human beings, still thought privately in terms of purpose and causality, but wrote and spoke publicly in guarded references to function and associations. The horrifying dullness of traditional scholastic biology is largely due to this superstitious fear of teleology which is in direct conflict with everyday life and makes the study of living processes as dreary as the conjugation of verbs in a dead language. At least in the physical sciences the distinction between the laws of nature and human purpose is useful and explicit.

The application of cybernetic principles to biology permits the classification of questions in the sense that in some cases it is legitimate to consider the purpose of a mechanism or a system when it can be shown to have a reflexive component. This criterion implies knowledge of what variables are limited, regulated or controlled and what would be the effect of their release from such control. Thus, in the case of the humble water closet, failure of the reflexive mechanism would leave the tank either empty or overflowing; the water level would seem to be the controlled variable and the ball-cock to control it. But the ball-cock is also controlled by the water-level. The flow of water might be a device for regulating the level of the float; our interpretation of the system depends on a priori or experimental evidence about the purpose of its design. Strangely enough, the introduction of purpose blurs the concept of causality. In a simple water tank without a ball-cock arrangement we can assert quite confidently that the flow of water causes the tank to fill and overflow; if the tap is shut the tank will never fill, if it is open, however slightly, the tank will fill and finally overflow. In such a system, the causal relation is clear but the purpose is undefined; there is no statement or observation about what the tank is for, and the amount of water overflowing will ultimately be exactly equal to the amount flowing in. Obviously the tank is a store or reservoir but its purpose is obscure. Now in the case of the reflexively controlled water tank, the purpose of the ball-cock is to control the water-level, but the circular relation (water level : ball-cock position : water-level) erases the arrow of causality.
This example is so mundane and familiar that the principle it illustrates may seem trivial, but the distinctions between linear and circular processes and between purpose and causality are not limited to gross mechanical devices; consideration of their implications may help to resolve many basic paradoxes of philosophy.
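The contrast between the open tank and the reflexively controlled one can be sketched in a few lines of Python. This is only an illustrative toy, not anything from Grey Walter's text: the function name `simulate_tank`, the proportional valve rule and all the numbers are assumptions chosen for clarity.

```python
def simulate_tank(steps, inflow_rate, capacity, target_level=None):
    """Return (level after each step, total water spilled).

    target_level=None models the plain tank: the tap simply stays open.
    Otherwise a hypothetical ball-cock closes the valve in proportion
    to how near the level is to target_level.
    """
    level = 0.0
    overflow = 0.0
    history = []
    for _ in range(steps):
        if target_level is None:
            opening = 1.0  # linear case: the flow just fills the tank
        else:
            # circular case: the level sets the valve, the valve sets the level
            opening = max(0.0, 1.0 - level / target_level)
        level += inflow_rate * opening
        if level > capacity:
            overflow += level - capacity  # everything above the rim is lost
            level = capacity
        history.append(level)
    return history, overflow


open_levels, open_spill = simulate_tank(200, 1.0, 10.0)
held_levels, held_spill = simulate_tank(200, 1.0, 10.0, target_level=8.0)
```

In the open tank the level rises to the rim and every further unit of inflow is spilled. With the assumed ball-cock the level settles near the target and nothing spills, yet no single line of the loop can be singled out as "the cause": the level and the valve opening each determine the other.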

Even if cybernetic development is regarded as essentially a branch of engineering rather than philosophy, the appearance of common principles in practical subjects as far apart as astronautics and epilepsy suggests that at least the artificial, academic boundaries between the faculties of physical science, biology, engineering and mathematics can be transcended with advantage and without risk of major error.

The fusion of traditionally detached topics is one of the big features of cybernetic thinking. This often appears in a practical form as the construction of models or analogues in which some abstract or theoretical proposition is embodied in “hardware”. The advantage of this procedure is that the ambiguity of vernacular language and the obscurity of unfamiliar mathematical expressions are both avoided. In the examples already given the assertions in words that “reflexive behavior gives an impression of purposefulness” or that “stability can be achieved by negative feedback” are all open to misunderstanding, particularly when translated into a foreign language. Verbal arguments about these propositions usually end with the familiar disclaimer: “it depends on what you mean by . . .”. But when these propositions are embodied in working models their content is unequivocal and their implications are open to test and verification. Such models may be called “crystallized hypotheses”: they are pure, transparent and brittle. Purity in this sense is achieved by strict application of the principle of parsimony, associated in Britain with the name of William of Ockham to whom is attributed the maxim “entia non sunt multiplicanda praeter necessitatem”. In a cybernetic model every component must have a strictly defined and visible function since all material components represent “entities” or terms in the basic theory. The transparency of such models derives in effect from their simplicity and the lack of needless embellishments and decorations; their function is to encourage the scientist to look through them at the problem. The third great advantage of a good model is that because of its simplicity and unambiguous design it is semantically brittle: when it fails it breaks neatly and does not bend and flow as words do.
In this way the orderly and practical classification of complex phenomena can be based on pragmatic material experiment rather than on a verbal synthesis that may, and usually does, arise from a purely linguistic association.

Unfortunately, one conclusion to be drawn from this is that an article such as this one is really unsuitable as a vehicle for cybernetic ideas since it must commit just the errors that cybernetic thinking tries to avoid. Attempts have been made to overcome the deficiencies of conventional channels of communication but none has succeeded, and perhaps the most pressing task for cyberneticians is to work out a means of organizing themselves in a new way so that traditional frontiers between disciplines can be transformed into highways of intellectual commerce. The few text-books and monographs also are essentially traditional in format and presentation though they embody original and provocative ideas. For example the works of Ashby (“Design for a Brain” and “An Introduction to Cybernetics”), George (“The Brain as a Computer”), Cherry (“On Human Communication”), and the modest but well balanced “La Cybernetique” of Guilbaud are excellent treatises but all bear traces of the specialist training of the authors and also of their natural deficiencies in the fields strange to them. The fault is not in these individuals but rather in the structure of our western culture that demands academic specialization for survival. Even now it is difficult, if not impossible, for a talented young university student to study, for example, physics, mathematics, biology, and sociology for an honours degree, and until this is an accepted course cyberneticians will be essentially amateurs in all but one branch of their subject. The fact that it is still impossible to be a professional cybernetician (in the sense that one can be a professional physicist or biologist or mathematician) gives the domain an attractive character of freshness, enthusiasm, and sometimes irresponsibility. It is quite easy to speculate and conjecture about possible machines and even to sketch out a design for them, but quite often the report or rumor of such designs has grown into a legend of a real super-robot.
We must remember that it is as easy for a speculative scientist’s sketch of an electronic fantasy to become a reputed master-machine as it was for a mariner’s fable to establish the sea-serpent. In these days of science-fiction turning to reality before our eyes there is a real danger of the myth-makers reporting dragons where there are only electronic tortoises.

In the English language at least these rather tiresome misunderstandings have often arisen because of the fashion for using the term “model” for a hypothesis, a theory or a scheme. In the literature of cybernetics, it is worth examining every reference to a “model” carefully to see whether it refers to a real piece of machinery or merely to a schematic notion.

In many cases the absence of a working model is justified by the futility of building a costly machine to perform a function which can already be envisaged clearly in the “paper model”. The basic axiom invoked, and one that is indeed fundamental to cybernetics, is that any function or effect that can be defined can be imitated. This is taken to apply even to the highest nervous functions of human beings and the power of the axiom is seen when such functions have to be defined. A typical case is that of translating machines in which the function would appear to be simply to transpose information from one code or language to another. The information in, say, an English-Russian dictionary can easily be transferred to an electronic computer and a program compiled to ensure that whenever a word in one language is presented to the computer the corresponding word in the other language is typed out. The outcome is explicit and inevitable if the term “translation” is defined this way as a one-to-one relation of words in the two languages. But everyone knows that for many of the words in such a dictionary there are several possible meanings, so the output of the computer would consist not of one word for each presented, but several words or even phrases. Furthermore, there may be no equivalent at all for some words.
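A minimal sketch makes the difficulty concrete. The tiny glossary below is purely illustrative (three transliterated Russian words, not a real lexicon), and the names `GLOSSARY` and `translate_word_for_word` are hypothetical.

```python
# Illustrative toy glossary; entries are transliterated and far from complete.
GLOSSARY = {
    "light": ["svet", "lyogkiy"],  # "illumination" versus "not heavy"
    "brain": ["mozg"],
}


def translate_word_for_word(sentence, glossary):
    """Look up each word separately and return every candidate rendering.

    A word missing from the glossary yields an empty list, standing in
    for a word that has no equivalent at all.
    """
    return [glossary.get(word, []) for word in sentence.lower().split()]


candidates = translate_word_for_word("light brain serendipity", GLOSSARY)
# candidates == [["svet", "lyogkiy"], ["mozg"], []]
```

Defined as a one-to-one lookup, "translation" is trivially imitated, but the output already shows the two failures named above: several candidates for one word and none for another. Choosing among the candidates requires information that is not in the word itself.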

The lesson here is that language, even in its most commonplace usage, is not deterministic but probabilistic: the information conveyed in any particular message depends on the foregoing and succeeding messages as well as on what else might have been said. The introduction of such notions of statistical probability into what were previously considered essentially logical situations is another of the important theories of cybernetics. On the mathematical side cybernetic principles are seen also in the contemporary approach sometimes described as “Finite Mathematics” in which limited concepts of sets, binary matrices and conditional probability are considered as including the special cases of conventional algebra and arithmetic.

The emergence of binary arithmetic as a practical implement from the obscurity of Boolean algebra is another significant example of bio-mathematical convergence. One of the great achievements of neuro-physiologists in the early part of the 20th Century was the establishment of the All-or-None Law for excitable tissues such as the heart, muscle fibers and nerve fibers. Careful experiment showed that a single cell in heart, muscle or nerve could respond to a stimulus in only one way, by a unit impulse discharge of standard size, duration and velocity of propagation. A stronger stimulus might elicit a larger number of unit impulses but they would always be the same size.

If the transmission of nerve impulses is considered as a language then it is a language with only one word: “yes”. This poverty of vocabulary has several important implications; the system must be non-linear, or in physiological terms, must have a threshold, a level of stimulation below which no effect is produced and above which the unit impulse appears. The mathematical representation of this relation would be a “step-function” in which there is an abrupt change in an ordinate value at some point along the abscissa. Another implication is that for the impulses in any given nerve channel to convey any specific information, the source of the stimulus must have a predetermined relation to the destination of the nerve channel. Physiologically, the nerve from, say, the eye to the brain will indicate light however and by whatever it is stimulated. The concepts of all-or-none response, threshold and local sign are fundamental to neurophysiology and were accepted many years before the corresponding notions emerged in the cybernetic consideration of communication and computation.
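The step-function and the "more impulses, never bigger impulses" rule can be written down directly. The sketch below is an assumption-laden toy: the function names, the choice to fire exactly at threshold, and the linear rate rule with its `gain` and `max_rate` parameters are all hypothetical, not physiological measurements.

```python
def unit_impulse(stimulus, threshold):
    """All-or-none response: 1 at or above threshold, 0 below it.

    Firing exactly at threshold is an arbitrary choice made here.
    """
    return 1 if stimulus >= threshold else 0


def impulse_count(stimulus, threshold, gain=1.0, max_rate=300):
    """Hypothetical rate code: a stronger stimulus yields more unit
    impulses, capped at max_rate, but each impulse is the same size."""
    if stimulus < threshold:
        return 0
    return min(max_rate, 1 + int(gain * (stimulus - threshold)))


weak = unit_impulse(0.4, threshold=0.5)     # below threshold: 0
strong = unit_impulse(5.0, threshold=0.5)   # far above threshold: still 1
```

The amplitude of the response carries no information about the strength of the stimulus; only the count of identical impulses does, which is why such a channel is naturally described in binary terms.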

Another factor common to biological and cybernetic systems is large numbers of elements. In the nervous system there are nerve cells with their processes the nerve fibres, while in an artificial device they are most likely to be a non-linear component such as a pair of valves or transistors to provide the appropriate unit impulse or binary digit. The provision of very large numbers of elements is again familiar in biology though novel in artificial systems. The cells in the body are counted in millions and in the human brain alone there are about ten thousand million nerve cells, but this number, vast though it is, is not the significant one in relation to brain function; it is the enormously greater number of ways in which these elements can interact with one another that indicates the scale of cerebral capacity. In artificial machines the number of elements does not yet approach that of the brain cells, but their speed of operation can be very much greater. The unit impulse of a brain cell or neuron lasts about one millisecond and the maximum discharge rate is rarely more than a few hundreds per second. In modern computers the pulses are more than a thousand times shorter and their frequencies of discharge are measured in millions per second. The rate of working of artificial systems can be enormously greater than living ones and it is commonplace for a calculation that would take a human mathematical prodigy several minutes, to be completed in one thousandth of a second by an electronic computer.

“Spontaneous” activity is generally considered as undesirable in a machine, but this is the principal feature of living animals from the unicellular protozoa to man, and no artificial system can be considered lifelike unless it displays some tendency to explore its surroundings. The illustration of this property was one of the main functions of the first “artificial animal” Machina Speculatrix which contained only two neurons, two sense organs and two effectors. The origin of this creature can best be described in terms of my own personal difficulty in envisaging the mechanics of reflexive behavior. As a physiologist my professional working hypothesis is that all behavior (including the highest human functions) can be described in terms of physiological mechanism. In trying to establish the principles on which such descriptions could be based I found great difficulty in deciding how complex the basic mechanism must be. Obviously, a single cell with only one function is trivial and inert unless stimulated. When two are included in the system so that they can interact freely, however, the whole situation is transformed at once. Where the single element system has only two modes of existence, on and off, the two element system has seven.

Now, in order to couple this system to its surroundings some sensory modalities were necessary and two that convey the simplest direct information are light and touch. But even when provided with a photo electric “eye” and a sensitive “skin” the creature was passive unless stimulated and no more lifelike than a telephone or a pithed frog. In order to give it “life” I provided it with two effectors, a motor to drive it across the ground and another to provide a rotary scanning motion for the eye and the driving wheel. With these additions the behavior of the model at once began to resemble that of a single protozoan; it explored all the accessible space, moving toward moderate lights and avoiding bright ones, avoiding or circumventing obstacles. Several other features emerged also (and this is one of the striking results of such essays in the imitation of life). If I had thought more clearly I might have foreseen these effects but I did not, and the fact that my thinking needed stimulus and demonstration of the real model indicates the limitations of the experimental mind, the practical value of constant interaction between thinking and observing.

The first surprising effect of providing the model with a scanning eye was that, when provided with two exactly equal and equidistant light stimuli, it did not hesitate or crawl half-way between them but always went to first one and then toward the other if the first was too bright at close quarters. This was obviously a free choice between two equal alternatives, the evidence of free will required by scholastic philosophers.

The explanation of this exhibition of what seems to some people a supernatural capacity, is simple and explicit: the rotary scansion converts spatial patterns into temporal sequences and on the scale of time there can be no symmetry. Simple though this explanation may be, the philosophic inferences are worth pondering—they suggest that the appearance of free-will is related to transformation of space to time-dimensions, and that the difficulties that seemed to impress the scholastic philosophers arose from their preoccupation with geometric analogy and logical propositions.
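How a rotating eye breaks a spatial tie can be shown with a very small simulation. It is a sketch under stated assumptions: bearings are whole degrees measured from the creature's heading, the eye steps one degree per tick, and the name `first_light_found` is hypothetical.

```python
def first_light_found(light_bearings, start_bearing=0, direction=1):
    """Sweep the eye one degree per tick and return (bearing, ticks)
    for the first light it meets, or (None, None) if there is none.

    direction is +1 or -1 and sets the sense of rotation.
    """
    lights = {b % 360 for b in light_bearings}
    for ticks in range(360):
        bearing = (start_bearing + direction * ticks) % 360
        if bearing in lights:
            return bearing, ticks
    return None, None


# Two equal lights placed symmetrically at +45 and -45 degrees.
chosen, ticks = first_light_found([45, -45])
```

In space the two lights are perfectly balanced, but the sweep meets one of them first and the creature can steer toward it at once; the other is never weighed against it. Reversing the sense of rotation (`direction=-1`) selects the opposite light, so the "choice" lies in the scanning order rather than in any deliberation.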

Another behavior mode that surprised me was related to the inclusion in the scanning circuit of an electric lamp to indicate when the scanner was switched off. The system is guided to a light by the disconnection or inhibition of the scanning motor when an adequate light enters the photo cell; sometimes the scanner would jam mechanically and it was hard to distinguish this trivial mechanical disorder from a relevant response. The pilot lamp was added to provide a sort of clinical sign to aid diagnosis or fault-finding. One evening the model wandered out into the hall of my house where there happened to be a mirror leaning against the wall. We heard a peculiar high squeaking sound that the model had never made before, and thinking that it must be seriously unwell we rushed out to help it. We found it dancing and squealing in front of the mirror; it had responded to its own pilot-light but in doing so had turned the light out, thus abolishing the stimulus so the light came on again and so on. The positive feedback or reflex through the environment generated a unique oscillatory state of self-recognition. If I had no prior knowledge of the machine’s structure and function and had assumed it was alive I should have attributed to it the power to identify a special class with one member—itself.
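The mirror episode reduces to a loop of two states, which a toy model can reproduce. The sketch assumes, as the account of the dance implies, that the pilot lamp is lit while the scanner runs and goes out when the scanner is inhibited; the function name `mirror_dance` and the discrete time steps are hypothetical simplifications.

```python
def mirror_dance(ticks, facing_mirror=True):
    """Return the pilot-lamp state (True = lit) at each tick.

    Assumed wiring: the lamp is lit while the scanner runs; light
    reaching the photocell inhibits the scanner for the next tick.
    """
    lamp_on = True
    states = []
    for _ in range(ticks):
        states.append(lamp_on)
        sees_light = lamp_on and facing_mirror  # reflection of its own lamp
        scanner_running = not sees_light        # light inhibits the scanner
        lamp_on = scanner_running               # lamp follows the scanner
    return states


flicker = mirror_dance(6)
# flicker == [True, False, True, False, True, False]
```

Away from the mirror the lamp simply stays lit; in front of it the creature's own output becomes its input and the system cannot settle, which is the oscillation heard in the hall.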

Similar but much more complicated effects are seen with a population of several such creatures. Each can “see” the others’ lights, but in responding to them extinguishes its own, so that yet another semi-stable state appears in which aggregates of individuals form and dissolve in intricate patterns of attraction, indifference, and (when two touch) repulsion. If the boundaries of the working space for this co-operative population are constricted, another state is produced in which contacts between individuals and with the barriers become so frequent that a “population pressure” can be measured. This supervenes quite suddenly and at the same time the responses to light (which are suppressed by the touch stimuli) disappear. The population as a whole is then inaccessible and aggressive, while in the state of free aggregation, with less constrictive boundaries, individuals could respond independently to a common stimulus: the common goal transforms a co-operative aggregation into a competitive congregation.

These complex patterns of behavior are recounted here to illustrate the value of precise definition and material imitation. If, for example, free-will is thought to be something more than a process embodied in M. speculatrix then it must be defined in terms other than the ability to choose between equal alternatives. If self-identification is more than reflexive action through the environment then its definition must include more than cogito, ergo sum.

The relative modesty of cybernetic achievement (the early claims and promises were certainly over-dramatized) has produced various splinter-groups, some tending toward a more philosophical or at least theoretical position, others concerned with strictly practical application. Among the latter, one of the intriguing titles is “Bionics”, a group in which the precedence and possible superiority of living systems is accepted, with the aim of using ideas gained from the study of real living processes to construct artificial systems with equivalent but superior performance. Thus, a man can easily learn to recognize patterns, whereas a machine that is to recognize the appropriate patterns, even when they are partly obscured, must be quite complex and carefully adjusted. If we knew more about how we learn to recognize and complete patterns we could make pattern-recognizing machines more easily and these could operate in situations (such as cosmic exploration) where men would be uncomfortable or more concerned with other problems.

A brief analysis of one cybernetic approach to problems of learning, recognition and decision has several interesting corollaries. One is that, even in the metal, such a system provides ample scope for diversity of temperament, disposition, character and personality. In material practice even very simple machines of this type differ very much from one another, even if they are designed to a close specification, and furthermore these differences tend to be cumulatively amplified by experience. In mass-produced passive machines, such as automobiles, individual differences are treated as faults, and are usually minimized by statistical quality control. Even at this level, however, individual characters do appear and, particularly when they involve a reflexive sub-system, also tend to increase with wear, which is the equivalent of experience in a passive machine.

Studies of this nature are now in progress in several centers of research. One of the important inferences from the simple models of learning is that in the far more complex living systems information from the various receptors (eyes, ears, skin and so forth) must be diffusely projected to wide regions of the brain as a part of the preliminary selective procedure. The extent of diffuse projection in the human brain is really astonishing; nearly all parts of the frontal lobes are involved in nearly all sensory integration, and with very short delays. The non-specific responses in these mysterious and typically human brain regions are often larger and always more widespread than those in the specific receiving areas for the particular sense organs. They also have another very interesting and important property which the specific responses do not show at all, and this is perhaps one of the most fundamental attributes of intelligent machinery, whether in the flesh or in the metal: habituation.

If a stimulus is applied monotonously and without variation in background, the diffuse responses in non-specific brain areas diminish progressively in size until after perhaps fifty repetitions they are invisible against the background of spontaneous intrinsic activity, even with methods of analysis that permit detection of signals much smaller than the background “noise”. This process of habituation is highly contingent however; a small change in the character or rhythm of the stimulus or in its relation to the background activity will immediately restore the response. Interestingly enough the change needed to re-establish significance may be a diminution in intensity; a series of loud auditory stimuli may result in complete habituation after a few minutes but if the same stimulus is given at a very low intensity the response may reappear at a high level. The same effect is seen with any novelty in rhythm or tempo and the conclusion is that, as predicted from the cybernetic model, the brain response to a single event is a measure of its novelty or innovation rather than of its physical intensity or amplitude.
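The habituation rule described above can be illustrated with a minimal sketch. It is a toy model, not Grey Walter's circuit or a physiological simulation: the function name `habituating_responses`, the multiplicative `decay` and the visibility `floor` are all assumptions chosen for illustration. The only point it makes is that the response tracks novelty rather than intensity, so a much quieter stimulus after a long loud series restores the full response.

```python
def habituating_responses(stimuli, decay=0.9, floor=0.02):
    """Return one response size per stimulus in the sequence.

    Toy rule (an assumption, not taken from the text): the response equals
    the stimulus's novelty. Novelty is 1.0 for any stimulus that differs
    from the previous one and shrinks by `decay` on each unchanged repeat.
    Responses below `floor` are treated as invisible against background.
    """
    responses = []
    previous = None
    novelty = 1.0
    for stimulus in stimuli:
        if stimulus != previous:
            novelty = 1.0        # any change, louder or quieter, restores the response
        else:
            novelty *= decay     # monotonous repetition diminishes it
        responses.append(novelty if novelty >= floor else 0.0)
        previous = stimulus
    return responses


# Fifty identical loud tones, then the same tone at very low intensity.
loud = ("tone", 90)
soft = ("tone", 20)
responses = habituating_responses([loud] * 50 + [soft])
```

In this sketch the intensity value never enters the calculation; it matters only as a difference from what came before, which is the sense in which the response measures innovation rather than amplitude.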

This observation probably accounts for the apparently (and literally) paradoxical effect described as “sub-liminal perception”. This phenomenon has attracted great interest as a means of “thought control” in advertising or other propaganda; it involves a presentation of a selected stimulus (such as an exhortation to buy a particular product or vote for a certain candidate) at a level of intensity, or for a brief period, below the threshold of “conscious recognition”. Stimuli at “sub-threshold” levels have in fact been found to influence the statistical behavior of normal human beings without their being aware of the nature or moment of the stimulus. These effects are so subtle and could be so sinister that attempts at sub-liminal influence have been banned in many countries by advertising associations. The paradox of influence by sub-threshold stimuli is resolved by considerations of threshold in terms not of intensity or duration but of unexpectedness or innovation. The mechanisms responsible for distributing signals to the non-specific brain regions constantly compute the information-content of the signals and suppress those that are redundant, while novel or surprising signals, however small, are transmitted with amplified intensity.

The effects of information selection are even more involved when the signals are part of a complex pattern of association. When response to a given signal has vanished with habituation it may be restored, not only by a change in the original signal itself but also by association of this with another subsequent signal. The response to the paired signals may also habituate, but if the second signal is an “unconditional” stimulus for action (that is, to gratify an appetite, gain a reward or avoid a penalty) habituation does not occur and in fact the first, conditional response shows progressive “contingent amplification”. At the same time the response to the second, “unconditional” stimulus, even if this be more intense than the conditional one, shows contingent occlusion.

The representation of this situation in real life is quite familiar. In driving an automobile one learns first to avoid obstacles, and this is based on the unconditional withdrawal reflex which prevents us from colliding with obstacles in any situation. The next stage is to learn to avoid symbolic obstacles, to stop at the red traffic lights for example. The red light is not harmful in itself; it implies the probability of collision, reinforced by police action, and so it is a conditional stimulus. The action of stopping at an intersection is determined not by the traffic, but by the light. When the light changes to green, however, the primary defensive action is restored and the real obstacles must be avoided. The same effect is seen in the brain; when a conditional warning stimulus which has shown contingent amplification is withdrawn, the response to the unconditional stimulus, which has been occluded, reappears at full size at once. The brain retains the capacity for unconditional training.

A particularly interesting aspect of these observations is the evidence for a dynamic short-term memory system, and here again the resemblance of living processes to those predicted theoretically from cybernetic models is quite startling. In CORA, the third-grade memory, which stores information about significant associations, consists of an electronic oscillatory resonant circuit in which an oscillation is initiated only when the significance of associated events surpasses the arbitrary threshold of significance. This oscillation decays slowly if the association is not repeated or reinforced. Quite recently it was discovered that in records of brain responses to visual stimuli an oscillation appears following the primary response, but only when the visual stimulus has acquired significance, either by irregularity or, more often, by association with unconditional stimuli to which the subject responds with an operant action. These after-rhythms are so precise and constant that they may also be operating as a brain-clock, regulating the time sequence of events in an orderly and effective pattern.
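The behavior attributed to the resonant memory above (an oscillation that starts only when significance exceeds a threshold, then decays unless reinforced) can be sketched as a damped oscillator with a trigger. This is an illustrative model only: the class name `ResonantMemory`, the numerical threshold, the decay factor and the period are assumptions, not values from CORA or from any brain recording.

```python
import math


class ResonantMemory:
    """Toy short-term memory: a triggered, slowly decaying oscillation."""

    def __init__(self, threshold=0.5, decay=0.95, period=10):
        self.threshold = threshold  # arbitrary threshold of significance
        self.decay = decay          # fraction of amplitude kept per time step
        self.period = period        # time steps per oscillation cycle
        self.amplitude = 0.0
        self.t = 0

    def associate(self, significance):
        """Start (or restart) the oscillation only if the association is significant."""
        if significance > self.threshold:
            self.amplitude = 1.0
            self.t = 0

    def step(self):
        """Advance one time step and return the current oscillator output."""
        value = self.amplitude * math.cos(2 * math.pi * self.t / self.period)
        self.amplitude *= self.decay
        self.t += 1
        return value


memory = ResonantMemory()
memory.associate(0.3)                               # below threshold: nothing is stored
weak_trace = [memory.step() for _ in range(20)]
memory.associate(0.8)                               # above threshold: after-rhythm begins
strong_trace = [memory.step() for _ in range(100)]
```

Calling `associate` again with a significant value before the trace has died away resets the amplitude, which is how reinforcement would keep the memory alive in this simplified picture.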

The relation of the conditional responses in the brain and their after-rhythms to the intrinsic brain rhythms, particularly the alpha rhythms, is still a challenging problem from which much may be learned not only about the living brain but also about the design of intelligent machines. Wiener in his book on Non-Linear Problems and in the second edition of Cybernetics has approached this question from the theoretical standpoint but the facts are even more confusing than he indicates. In the first place many normal people show no signs of alpha rhythms at all, so whatever function these rhythms mediate must be associated with their suppression rather than their presence. This is not as unreasonable as it sounds, for the alpha rhythms do in fact disappear in states of functional alertness and attention, and the brains of people without alpha rhythms seem to be involved perpetually in the manipulation of visual images. Secondly, the alpha rhythms are usually complex; three or four linked but independent rhythms can often be identified in different brain regions. Third, the alpha waves are not stationary—they sweep over or through the brain. In normal people the direction of sweep is usually from the front to back during rest with the eyes shut, but the pattern is broken up and complicated by mental or visual activity. In patients with mental disturbances of the neurotic type the direction of sweep is often reversed back-to-front, and this effect has been seen for a period of a few months in normal people under severe mental stress. Apart from major disturbances of this sort, the frequency and phase relations of the alpha process are so constant, even in variations of age and temperature, that one is tempted to consider them as ultra-stabilized and to search for a purpose or primary function for them.

Any commonplace analogy is probably far too simple and ingenuous to do more than suggest more relevant experiments, but one mechanism that seems to have similar properties is the traffic-operated signal network on a railway or road system. Such signals are in the reflexive or feedback class since they control traffic but are also controlled by it. In the application of this system to urban road traffic the signals have an intrinsic rhythm when traffic is heavy, so that traffic flows alternately from one direction and then orthogonally. The signals along any main thoroughfare are also synchronized with a phase-delay, so that for a period the traffic can proceed steadily at a limited pace from one end to the other without hold-up. Cross traffic at intersections is held up while the “green” period lasts, but the orthogonal streets may also have phased control-signals so that when the main street “green” is over, the cross traffic also may proceed across many intersections at a certain speed. Now, when the traffic is light in one direction it is wasteful to have the same rhythm and phase of signal as when it is heavy, and the traffic-operated system ensures that a crossing is barred by a red light until a certain number of vehicles have operated the road-pad, when the crossing is opened and the phase-locked sequence is initiated for that street in its turn. When there is little or no traffic the time-sequence will operate alone, providing rhythmic waves of potential inhibition and facilitation which would be seen by a viewer as waves of green and red light sweeping rhythmically along the traffic routes. When the traffic increased again the rhythmic sweep would be interrupted, as the alpha waves in the brain cease, since each vehicle would trigger its own free-way.
The effect on the traffic (in the brain the actual volleys and trains of impulses conveying information) would be to divide the chaotic inflow into packets of vehicles alternately stationary, waiting for the green light, and then travelling at a constant speed until the end of the open route. This rather detailed description of a familiar (and sometimes exasperating) scene is presented as an example of the basic principles of traffic control which may be as important in the living brain as in a busy city.
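The phase-delayed part of this scheme can be made concrete with a small sketch. It models only the fixed time-sequence along one thoroughfare and leaves out the traffic-operated override entirely; the function names, signal spacing, cycle length and design speed are all assumptions made for illustration. Each signal's green period is delayed by the travel time from the start of the street, so a vehicle moving at the design speed meets green at every crossing, while a faster one runs ahead of the wave and is stopped.

```python
def is_green(position, t, design_speed=10.0, cycle=60.0, green=30.0):
    """Is the signal at `position` (metres) green at time `t` (seconds)?

    Each signal repeats the same cycle, delayed by the time a vehicle at
    `design_speed` (metres per second) needs to reach it from position 0.
    """
    offset = position / design_speed
    return (t - offset) % cycle < green


def signals_met(positions, entry_time, speed, **timing):
    """State of each signal at the moment a vehicle entering at position 0 arrives."""
    return [is_green(x, entry_time + x / speed, **timing) for x in positions]


positions = [0.0, 200.0, 400.0, 600.0, 800.0]    # hypothetical street layout

at_design_speed = signals_met(positions, entry_time=25.0, speed=10.0)
too_fast = signals_met(positions, entry_time=25.0, speed=20.0)
```

With these assumed numbers the vehicle at the design speed finds every signal green, whereas the vehicle at twice that speed arrives at the fourth signal before its green period has begun. Seen from above, the same offsets would appear as a band of green sweeping down the street at the design speed.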

Applying the principle of seeking purpose where reflexive relations have been identified, we may ask what the purpose of the system is: what is actually being regulated or stabilized? In the city traffic the conditions desired are that every vehicle should have an equal chance of reaching its destination at the expected time. We must remember that every vehicle has in effect a rendezvous, an appointment in time and place. Applying the same interpretation to the brain as an information-distributing machine, it is equally true that every signal-vehicle, in the form of a train of nerve impulses, has a provenance and a destination, an appointment with some other information-packet. The systematic grouping and routing of these information-packets may well be the function of intrinsic brain rhythms: their effect will be to limit the maximum rate of action, but avoid complete breakdown by chaotic interaction of cross-streams. In brains that exhibit no intrinsic rhythms the inference would be that all the traffic control devices are being traffic-operated and this system over-rides the time-sequence process, while in brains with persistent alpha rhythms the intrinsic time-cycles are pre-potent and all signal-vehicles are constrained to follow this procedure. We know from our acquaintance with actual traffic systems in various cities that both the strict time-phasing and the traffic-operated system can work well, and that various types of combination of both also work. We also know that above a certain traffic density any of these systems may break down and that failure-to-safety can be assured most easily by having all controls near the center of the jam set to red while the peripheral traffic filters away.
Bearing in mind that in the brain all these controls and filters are likely to be statistical rather than absolute, we may extend the conjecture to include the sweep-reversal seen in neurotic patients and in normal people under stress as a failure-to-safety device, holding up neuronic traffic but reducing the probability of collision or futile encounter.

These comparisons illustrate how observations on systems as diverse as the dark world within our skulls, the flashing lights of a busy city, the meanderings of an artificial animal and the lonely terror of a mental ward may illuminate one another to provide a general idea from which each in turn may benefit. Cybernetic claims have been derided because in many cases they seem to provide merely blinding glimpses of the obvious, and indeed the discoveries and inventions in cybernetic engineering have often been anticipated either by the evolution of living systems or by common sense. Even in the most trivial situations, however, the cybernetic approach can both unify apparently remote concepts and dissolve away the aura of transcendental influence that surrounds such terms as “intelligence”, “purpose”, “thinking”, “personality”, “causality” and “free will”. We are still in the age of cybernetic amateurs who are content to test their skill with machines that play games and imitate the simplest vital functions. The next generation of professional steersmen, who are already maturing in the great technical institutes of many countries, will offer even more profound and revolutionary principles and contrivances to technocratic culture. One of the most significant struggles will certainly be over the cybernetics of cybernetics in society: who is to control whom and with what purpose?

Democratic society as defined in the West (that is, universal suffrage, secret ballots, two or three political parties, public debate, decision by majority in two houses, moderating influence of President or constitutional Monarch) is an excellent example of a cybernetic evolution, perhaps more steersman-like than even Ampère would have imagined. In some ways Western democracy is remarkably sophisticated. The suffrage system (one man, one vote, and election by bare majority) may be defined as a binary opinion amplifier with statistical stabilization. However strong and widely held an opinion may be, only one candidate can be elected in any constituency. On the other hand the coupling to the legislative assembly and reflexive action of the legislation on the voters is generally slightly positive, leading to a slow control. Government “by the people and for the people” is a precise embodiment of the cybernetic axiom that in a reflexive system causality disappears as purpose emerges. One of the most delicate adjustments in Western democracy is the timing of elections to match the natural period of oscillation. The American Constitution is a perfect example of phase control since the President is elected every four years and one third of the Senate every two years. This constitutes introduction of a small component at the second harmonic frequency of the pulse repetition-rate, leading to an effect similar to rectification of an alternating pulse waveform. Politically, the effect of this is to diminish the probability of violent swings of policy from one extreme to the other; a period of relative tranquility corresponding to two or four presidential terms will tend to be followed by a marked deflection in one direction but the opposing swing to the other side will again be diminished by the second harmonic rectification.
This effect is acknowledged in practice by the traditional conflict between Executive and Legislative which is of course quite different from the system in other countries where the Prime Minister is necessarily a member of the majority party and the President or Monarch has a minimal influence in policy decisions. The ingenuity of the American Constitution reflects the cybernetic insight of its originators and its survival with only minor amendments since 1787 indicates its basic stability. If the full cybernetic implications of this unique specification for dynamic equilibrium had been realized at its inception, even the genius of Benjamin Franklin might have recoiled from the complexities of its checks and balances.

At the other extreme of political organization, the autocratic tyranny or dictatorship also displays cybernetic qualities of universal interest. In place of an elected assembly the dictator must rely on a spy-network to provide information about popular feeling and economic trends. As long as the political police are unobtrusive and act merely as opinion samplers the system can be stable, since the autocrat can regulate his edicts by reference to popular opinion which in turn is influenced by the edicts. Serious instability in an autocratic regime arises when the political police actively suppress expressions of opinion by arrest and mass execution. This destroys the sources of information and ensures an explosive evolution. The principle of innovation applies here as it does in the brain; in political evolution it is the unexpected that matters and since by definition the unexpected will appear first on a small scale, minority views must be constantly sampled since among them will be found the earliest harbingers of future change. In the brain, the responses evoked by novel stimuli involve no more than one percent of the available nerve cells, but this minority response is a clear indication of the likely trend in behavior. Similarly, in the political system the majority is always wrong in the sense that it preserves the impression of the past rather than a plan for the future. The Autocrat must therefore take great care that the ears of his henchmen are tuned to dreams and whispers. This suggestion, that the majority is always wrong, has important implications for electoral democratic systems also; minority views are represented in free elections, but if these result in the sub-division of parties into many splinter groups the operation of the legislative assembly becomes sluggish and inconsistent. The more effective arrangement is for the growth of a minority view to influence the bias of the opinion amplifier, that is, to modify the policy of a major party.

In comparing social with cerebral organizations one important feature of the brain should be kept in mind; we find no boss in the brain, no oligarchic ganglion or glandular Big Brother. Within our heads our very lives depend on equality of opportunity, on specialization with versatility, on free communication and just restraint, a freedom without interference. Here too local minorities can and do control their own means of production and expression in free and equal intercourse with their neighbors. If we must identify biological and political systems our brains would seem to illustrate the capacity and limitations of an anarcho-syndicalist community.